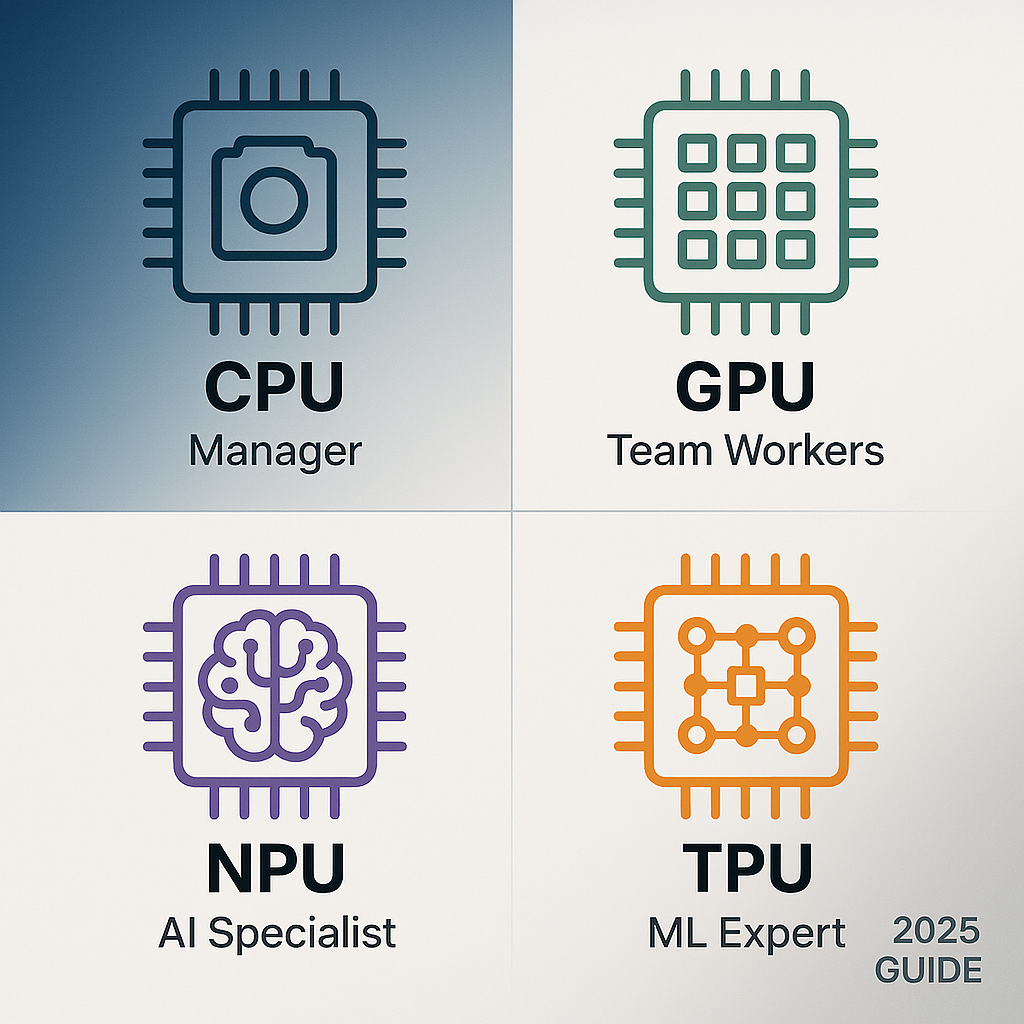

Understanding CPUs, GPUs, NPUs, and TPUs: A Simple Guide to Processing Units

🧠 Ever wondered why your phone has multiple "brains"? From CPUs managing daily tasks to NPUs powering AI features, each processor has a unique job. Our complete guide breaks down CPU vs GPU vs NPU vs TPU in simple terms—plus what's coming next in 2025!

The computer sitting on your desk or the phone in your pocket contains multiple tiny brains working together. Each brain has a different job, much like how different people in an office handle different tasks. Some people work alone on complex problems while others work in teams on simple, repetitive tasks.

These computer brains are called processing units, and understanding them helps you see why modern technology works the way it does. We'll explore four main types: CPU, GPU, NPU, and TPU.

The CPU: Your Computer's Main Brain

Think of the Central Processing Unit (CPU) as the manager of your computer. It runs the operating system and coordinates nearly every task that keeps your system running, from opening files to launching programs. The CPU acts like a skilled worker who can tackle any job, and each of its cores is designed to work through one sequence of instructions as fast as possible.

The CPU contains a small number of powerful cores, from a handful in phones and budget laptops to 64 or more in high-end workstations and servers. Each core operates like a master craftsman who works with great precision and speed. When you click on an application or save a document, the CPU springs into action.

Modern CPUs use something called cache memory, which works like a desk drawer where the manager keeps frequently used tools. This cache comes in layers labeled L1, L2, and L3, with L1 being the fastest but smallest. The CPU can grab information from cache much faster than from your computer's main memory.
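The desk-drawer analogy can be made concrete with the standard average-memory-access-time estimate: each cache level adds its own lookup time, plus the cost of the next level down whenever the data isn't found there. The sketch below assumes illustrative latencies and miss rates (1 ns, 4 ns, and 12 ns for L1 to L3, 80 ns for main memory); these are hypothetical round numbers, not measurements of any particular CPU.

```python
# Illustrative numbers only: real latencies and miss rates vary by CPU and workload.
def average_access_time(levels, memory_latency_ns):
    """Estimate the average time to fetch one piece of data.

    levels: list of (hit_time_ns, miss_rate) tuples ordered from L1 (fastest)
    to L3 (slowest). A miss at one level falls through to the next level,
    and a miss at the last cache level goes to main memory.
    """
    time_ns = memory_latency_ns
    # Work backward from main memory toward L1:
    # cost = this level's lookup time + miss_rate * cost of the level below
    for hit_time_ns, miss_rate in reversed(levels):
        time_ns = hit_time_ns + miss_rate * time_ns
    return time_ns


# Hypothetical cache hierarchy: (hit time in ns, fraction of lookups that miss)
caches = [(1.0, 0.10), (4.0, 0.40), (12.0, 0.50)]
print(f"with caches:    {average_access_time(caches, 80.0):.2f} ns")  # 3.48 ns
print(f"without caches: {average_access_time([], 80.0):.2f} ns")      # 80.00 ns
```

Even though main memory is 80 times slower than L1 in this example, the average fetch takes only a few nanoseconds, because most requests are answered from a cache level.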

CPUs excel at tasks that require complex decision-making and quick switching between different jobs. When you browse the web while listening to music and checking email, your CPU juggles all these activities smoothly. However, CPUs are comparatively inefficient at tasks that repeat the same simple calculation millions of times, which brings us to our next processing unit.

The GPU: The Parallel Processing Powerhouse

The Graphics Processing Unit (GPU) is organized very differently from a CPU. While a CPU has a few powerful cores, a GPU contains thousands of smaller, simpler cores. Imagine the difference between having 8 expert chefs and having 2,000 line cooks who all perform the same simple step at once, each on a different plate.

Originally, GPUs handled graphics and visual effects for games and videos. They excelled at drawing millions of pixels on your screen because each pixel could be processed separately. The GPU could work on different parts of an image at the same time, which made graphics smooth and fast.
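Why per-pixel work parallelizes so well can be shown with a small sketch: a brightness adjustment where each output pixel depends only on its own input pixel. The function names and the brightness amount are hypothetical, and Python runs the chunks one after another; the point is that processing them in any order produces the same image, which is what lets a GPU hand each chunk to a different group of cores at the same time.

```python
def brighten(pixel, amount=40):
    """Brighten one (r, g, b) pixel, clamping each channel to the 0-255 range."""
    return tuple(min(255, channel + amount) for channel in pixel)


def process_in_chunks(pixels, chunk_size, reverse=False):
    """Apply brighten() chunk by chunk; reverse=True processes the last chunk first."""
    result = [None] * len(pixels)
    starts = list(range(0, len(pixels), chunk_size))
    if reverse:
        starts.reverse()
    for start in starts:
        # No pixel reads any other pixel's result, so chunks never wait on each other
        for i in range(start, min(start + chunk_size, len(pixels))):
            result[i] = brighten(pixels[i])
    return result


image = [(10, 20, 30), (200, 220, 250), (0, 0, 0), (128, 128, 128)]
forward = process_in_chunks(image, chunk_size=2)
backward = process_in_chunks(image, chunk_size=2, reverse=True)
print(forward)              # [(50, 60, 70), (240, 255, 255), (40, 40, 40), (168, 168, 168)]
print(forward == backward)  # True
```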

Then researchers discovered something interesting. The same parallel processing that made graphics beautiful also worked remarkably well for artificial intelligence and machine learning. Training an AI model involves billions of similar mathematical calculations, mostly multiplying large grids of numbers called matrices, which is exactly what GPUs do best.
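Most of those calculations are matrix multiplications. In the minimal sketch below (plain Python, with a hypothetical `matmul` helper), every cell of the output is its own dot product that doesn't depend on any other cell, which is the kind of independent work a GPU spreads across thousands of cores.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("a's column count must equal b's row count")
    # Each output cell c[i][j] is an independent dot product of row i and
    # column j; a GPU can compute many of these cells at the same time.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


print(matmul([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]]))
# [[58, 64], [139, 154]]

# Scale check: multiplying two 4,096 x 4,096 matrices takes 4,096 ** 3
# multiply-add operations, about 68.7 billion.
print(4096 ** 3)  # 68719476736
```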

The architecture of GPUs focuses on throughput rather than individual speed. Each core in a GPU runs slower than a CPU core, but having thousands of them working together creates incredible computing power. This design makes GPUs ideal for tasks like mining cryptocurrency, processing large datasets, and training neural networks.
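The throughput-versus-speed trade-off comes down to simple arithmetic: peak throughput is roughly cores × clock speed × operations per core per cycle. The chip figures below are hypothetical round numbers chosen to resemble a desktop CPU and a GPU, not the specifications of real products, and real workloads rarely reach these peaks.

```python
def peak_flops(cores, clock_hz, flops_per_cycle):
    """Theoretical peak floating-point operations per second (FLOPS)."""
    return cores * clock_hz * flops_per_cycle


# Hypothetical CPU: 8 fast cores at 4.0 GHz, each doing 32 operations per cycle
# using wide vector (SIMD) instructions.
cpu = peak_flops(cores=8, clock_hz=4.0e9, flops_per_cycle=32)

# Hypothetical GPU: 10,000 simpler cores at a slower 1.8 GHz, each doing one
# fused multiply-add (counted as 2 operations) per cycle.
gpu = peak_flops(cores=10_000, clock_hz=1.8e9, flops_per_cycle=2)

print(f"CPU: {cpu / 1e12:.1f} TFLOPS")  # CPU: 1.0 TFLOPS
print(f"GPU: {gpu / 1e12:.1f} TFLOPS")  # GPU: 36.0 TFLOPS
print(f"GPU/CPU: {gpu / cpu:.0f}x")     # GPU/CPU: 35x
```

Each hypothetical GPU core is slower than a CPU core, but the sheer number of them gives the GPU far more total throughput.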

GPUs come in two forms: integrated and discrete. Integrated GPUs share memory with the CPU and consume less power, making them suitable for laptops and basic graphics tasks. Discrete GPUs have their own dedicated memory and cooling systems, providing much higher performance for demanding applications.

The NPU: AI's Specialized Assistant

Neural Processing Units (NPUs) represent the newest evolution in processing technology. These chips focus entirely on artificial intelligence tasks, particularly running AI models on your device rather than in the cloud.

NPUs take their name from neural networks, the AI models loosely inspired by connections between brain cells, but the chips themselves don't mimic the brain. Instead, NPUs are optimized for the specific mathematical operations that neural networks require, chiefly huge numbers of multiply-and-add steps on matrices, often using lower-precision numbers to save energy. They excel at tasks like image recognition, voice processing, and language translation.
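Many NPUs gain efficiency by running neural-network math on low-precision integers (often 8-bit) instead of 32-bit floating-point numbers, because integer multipliers are smaller and cheaper to power. The sketch below shows the general idea, symmetric 8-bit quantization of a dot product; it is a simplified illustration, not any vendor's NPU interface, and the helper names are hypothetical.

```python
def choose_scale(values):
    """Pick a scale so the largest magnitude maps to 127 (the int8 maximum)."""
    largest = max(abs(v) for v in values)
    return largest / 127 if largest else 1.0


def quantize(values, scale):
    """Convert floats to integers in the int8 range [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(weights, inputs):
    """Approximate a float dot product using only integer multiply-adds."""
    w_scale, x_scale = choose_scale(weights), choose_scale(inputs)
    qw, qx = quantize(weights, w_scale), quantize(inputs, x_scale)
    # The expensive part is pure integer math; hardware usually accumulates
    # int8 products in a wider (e.g. 32-bit) register to avoid overflow.
    acc = sum(a * b for a, b in zip(qw, qx))
    # One floating-point multiply at the end converts back to real units.
    return acc * w_scale * x_scale


weights = [0.5, -1.0, 0.25]
inputs = [2.0, 1.0, -4.0]
exact = sum(w * x for w, x in zip(weights, inputs))
print(exact)                                # -1.0
print(round(int8_dot(weights, inputs), 3))  # close to -1.0, with a small rounding error
```

The small error is the price of quantization; for many trained networks it barely affects accuracy, while the integer arithmetic is much cheaper in hardware.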

The key advantage of NPUs lies in their energy efficiency. While GPUs consume significant power when running AI tasks, NPUs can handle the AI workloads they are designed for using much less electricity. This efficiency makes them well suited to smartphones, tablets, and other battery-powered devices.
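Energy efficiency is about total energy, not just speed: energy = power × time. With hypothetical figures (a GPU drawing 30 W that finishes an AI task in 10 ms, and an NPU drawing 3 W that takes 20 ms), the slower NPU still uses a fifth of the energy. These numbers are illustrative assumptions, not benchmarks of real chips.

```python
def energy_millijoules(power_watts, time_ms):
    """Energy in millijoules: watts x milliseconds = millijoules."""
    return power_watts * time_ms


# Hypothetical figures for running one AI task (for example, tagging a photo)
gpu_mj = energy_millijoules(power_watts=30, time_ms=10)  # faster but power-hungry
npu_mj = energy_millijoules(power_watts=3, time_ms=20)   # slower but frugal

print(gpu_mj, npu_mj)   # 300 60
print(gpu_mj / npu_mj)  # 5.0
```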

Companies like Apple, Qualcomm, and Intel now include NPUs in their processors. When you use features like voice recognition on your phone or real-time photo enhancement, an NPU often handles these tasks. The NPU processes this information locally on your device, which improves privacy and reduces the need for internet connectivity.

NPUs represent a shift toward "edge computing," where AI processing happens on your device rather than on remote servers. This approach reduces delays and keeps your personal data more secure.

The TPU: Google's AI Specialist

Tensor Processing Units (TPUs) take specialization even further than NPUs. Google developed TPUs specifically for machine learning workloads, particularly for training and running large neural networks.

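TPUs are built around a systolic array: a large grid of simple multiply-and-add units through which data pulses in a steady rhythm, much as a heartbeat pumps blood, with each unit passing values on to its neighbors. Because every number fetched from memory is reused by many units as it moves across the grid, the chip spends far less time waiting on memory, one of the main bottlenecks that slow traditional processors on AI tasks. The result is dramatically faster performance for the matrix calculations at the heart of neural networks.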
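The rhythm of a systolic array can be modeled in a few lines. In this hypothetical sketch, a grid of processing elements (PEs) computes a matrix product: inputs enter the grid staggered in time, so PE (i, j) receives A[i][k] and B[k][j] together on clock cycle i + j + k and adds their product to its running total. It assumes a simple output-stationary design, where each PE keeps its own result; real TPU matrix units may organize the data flow differently, and the sketch tracks timing rather than simulating individual registers.

```python
def systolic_matmul(a, b):
    """Model an output-stationary systolic array computing a (n x k) times b (k x m).

    Returns the product and the number of clock cycles the array needs.
    """
    n, k, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]  # one running total per processing element
    # Skewed inputs: row i of a enters i cycles late and column j of b enters
    # j cycles late, so operand pair `step` reaches PE (i, j) on cycle i + j + step.
    cycles = n + m + k - 2
    for t in range(cycles):
        for i in range(n):
            for j in range(m):
                step = t - i - j  # which operand pair arrives at PE (i, j) now
                if 0 <= step < k:
                    acc[i][j] += a[i][step] * b[step][j]
    return acc, cycles


product, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19, 22], [43, 50]]
print(cycles)   # 4
```

On every cycle, many PEs work at once on values that arrived from their neighbors, so each number read from memory contributes to a whole row or column of results.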

Google uses TPUs to power many of its services, including Search, Photos, and Google Translate. The company also makes TPUs available through its cloud platform, allowing other organizations to rent this specialized computing power.

Trillium, Google's sixth-generation TPU, delivers several times the per-chip performance of the generation before it. In 2025, Google announced its seventh generation, Ironwood, which can link more than 9,000 TPU chips together in a single system.

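TPUs excel at both training AI models and running them in production. They are programmed through Google's XLA compiler, which supports TensorFlow, JAX, and PyTorch (via PyTorch/XLA), and they perform best on workloads dominated by large matrix operations; models with irregular computations or unusual custom operations may run less efficiently.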

Why Multiple Processing Units Exist

You might wonder why we need all these different types of processors. The answer lies in the fundamental trade-offs of computer design.

General-purpose processors like CPUs can handle any task but aren't optimized for specific workloads. Specialized processors like GPUs, NPUs, and TPUs sacrifice flexibility for performance in their target applications.

Consider this analogy: a Swiss Army knife can perform many tasks, but specialized tools work better for specific jobs. You wouldn't use a Swiss Army knife to build a house, just as you wouldn't use a CPU to train a large AI model if better alternatives exist.

Different tasks require different approaches to processing. Sequential tasks that involve complex logic work best on CPUs. Parallel tasks with simple operations suit GPUs. AI inference on mobile devices fits NPUs well. Large-scale AI training and deployment benefit from TPUs or data-center GPUs.
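The rules of thumb in this paragraph can be written down as a toy decision function. The `Workload` fields and the `suggest_processor` helper are hypothetical, and the rules deliberately ignore cost, software support, and availability, so treat it as a summary of the paragraph rather than a sizing tool.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a job, using the traits discussed above."""
    is_ai: bool = False
    highly_parallel: bool = False
    battery_powered: bool = False
    large_scale_training: bool = False


def suggest_processor(w: Workload) -> str:
    """Toy rule of thumb; real decisions also weigh cost, software, and availability."""
    if w.is_ai and w.battery_powered:
        return "NPU"         # on-device inference where power is limited
    if w.is_ai and w.large_scale_training:
        return "TPU or GPU"  # big data-center training runs
    if w.highly_parallel or w.is_ai:
        return "GPU"         # many simple, independent operations
    return "CPU"             # sequential work with complex logic


print(suggest_processor(Workload()))                                  # CPU
print(suggest_processor(Workload(highly_parallel=True)))              # GPU
print(suggest_processor(Workload(is_ai=True, battery_powered=True)))  # NPU
```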

Modern computers often combine multiple processing units to handle diverse workloads efficiently. Your smartphone might contain a CPU for general tasks, a GPU for graphics, and an NPU for AI features. High-end workstations might include powerful CPUs, multiple GPUs, and specialized accelerators.

The Current State of Processing Technology

As of 2025, the processing landscape continues to evolve rapidly. NVIDIA dominates the market for data-center AI GPUs with its Blackwell architecture, which offers significant improvements in both performance and energy efficiency. The company plans to release even more powerful chips in the coming years.

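AMD challenges NVIDIA with its Instinct accelerators, launching the MI350 series in 2025 and announcing the MI400 series for 2026, and promises competitive performance at lower cost. Intel offers its Gaudi AI accelerators, while cloud providers such as Google and Amazon design their own chips (Google's TPUs and Amazon's Trainium and Inferentia) to reduce dependence on external suppliers.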

The rise of artificial intelligence drives much of this innovation. As AI models become larger and more complex, they require increasingly powerful hardware. This demand pushes companies to create faster, more efficient processors.

Edge computing represents another major trend. Instead of processing everything in massive data centers, companies want to run AI on phones, cars, and other devices. This shift requires processors that balance performance with power efficiency.

Looking Toward the Future

The next few years will bring exciting developments in processing technology. Several trends are shaping this evolution.

Neuromorphic computing attempts to create processors that work more like biological brains. These chips could be incredibly energy-efficient while handling AI tasks that current processors struggle with.
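Many neuromorphic designs, such as Intel's research chip Loihi, use spiking neurons that communicate through brief pulses instead of continuous numbers. The simplest common model is the leaky integrate-and-fire neuron, sketched below with hypothetical leak and threshold values: the neuron accumulates incoming signals, slowly leaks charge, and fires a spike only when it crosses a threshold. Because work happens mainly when spikes occur, hardware built this way can sit largely idle between events, which is one source of its potential energy savings.

```python
def leaky_integrate_and_fire(inputs, leak=0.9, threshold=1.0):
    """Simulate one spiking neuron over discrete time steps.

    inputs: input current at each time step.
    Returns a list with 1 where the neuron spiked and 0 elsewhere.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Charge leaks away a little each step, then new input is added
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)   # fire a spike...
            potential = 0.0    # ...and reset, like a neuron after firing
        else:
            spikes.append(0)
    return spikes


print(leaky_integrate_and_fire([0.5, 0.5, 0.5, 0.0, 0.0, 1.2]))
# [0, 0, 1, 0, 0, 1]
```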

Quantum processing represents a completely different approach to computation. While still experimental, quantum computers might solve certain problems exponentially faster than traditional processors.

Custom silicon continues gaining popularity. Major technology companies increasingly design their own processors rather than relying on general-purpose chips. This approach allows optimization for specific workloads and reduces costs at scale.

The integration of different processing types on single chips will expand. Many current chips, such as Apple's M-series and Qualcomm's Snapdragon processors, already combine a CPU, GPU, and NPU on one piece of silicon, and future designs will likely add more specialized units to create even more versatile and efficient systems.

Optical computing could revolutionize how processors handle certain calculations. Using light instead of electricity for some operations might dramatically increase speed while reducing power consumption.

Practical Implications for Users

Understanding these processing units helps you make better technology decisions. When buying a computer, consider what tasks you'll perform most often.

- For general productivity work like web browsing, document editing, and media consumption, a modern CPU with integrated graphics suffices. Look for processors with good single-core performance and adequate cache memory.

- For gaming, video editing, or other graphics-intensive tasks, invest in a discrete GPU. The amount of video memory, the memory bandwidth, and the number and speed of processing cores largely determine performance in these applications.

- For AI development or machine learning work, GPUs currently offer the best combination of performance and software support. NVIDIA's CUDA platform provides excellent compatibility with most AI frameworks.

- For mobile devices, processors with integrated NPUs provide better battery life when using AI features. These chips enable capabilities like real-time photo enhancement and voice recognition without draining your battery quickly.

- For cloud-based AI applications, consider services that offer access to specialized processors like TPUs. These can provide excellent performance for specific workloads without requiring large upfront investments.
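For the AI-development case in the list above, frameworks usually let code check at runtime which processor is available. A minimal sketch assuming PyTorch: `torch.cuda.is_available()` reports a usable NVIDIA CUDA GPU and, in recent versions, `torch.backends.mps.is_available()` reports Apple-silicon GPU support. The `pick_device` helper itself is hypothetical and falls back to the CPU when PyTorch isn't installed.

```python
def pick_device() -> str:
    """Return the best available PyTorch device name: "cuda", "mps", or "cpu"."""
    try:
        import torch  # optional dependency
    except ImportError:
        return "cpu"  # PyTorch not installed, so everything runs on the CPU

    if torch.cuda.is_available():  # NVIDIA GPU with a working CUDA setup
        return "cuda"
    mps = getattr(torch.backends, "mps", None)  # missing in older PyTorch versions
    if mps is not None and mps.is_available():  # Apple-silicon GPU
        return "mps"
    return "cpu"


device = pick_device()
print(f"Running on: {device}")
# With PyTorch installed, tensors and models can then be moved there,
# e.g. model.to(device) or torch.zeros(3, device=device).
```

Writing code this way lets the same script use a GPU when one is present and still run, more slowly, on a CPU-only machine.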

The Bigger Picture

The evolution of processing units reflects the changing nature of computing itself. Early computers focused on calculation and data processing. Modern systems emphasize parallel processing, artificial intelligence, and real-time responsiveness.

This shift affects entire industries. Healthcare uses AI chips for medical imaging and drug discovery. Automotive companies rely on specialized processors for autonomous driving. Financial services use AI accelerators for fraud detection and algorithmic trading.

The environmental impact of computing also drives processor development. More efficient chips reduce energy consumption in data centers, which used about 1.5 percent of global electricity in 2024, according to International Energy Agency estimates, a share expected to grow as AI expands.

As artificial intelligence becomes more prevalent in daily life, specialized processors will become even more important. The ability to run AI models efficiently and privately on personal devices will shape how we interact with technology.

Understanding CPUs, GPUs, NPUs, and TPUs prepares you for a future where these technologies become increasingly integrated into everyday devices and services. Whether you're a technology professional, student, or simply curious about how modern devices work, this knowledge provides a foundation for navigating our increasingly digital world.

The processing revolution continues, driven by our insatiable demand for faster, smarter, and more efficient computing. Each type of processor plays a crucial role in this ecosystem, working together to enable the technological marvels that define our modern world.