Grok AI Fundamentals: Core Concepts, Capabilities, and Technical Foundation

Definition and Purpose

Grok AI represents a significant evolution in conversational artificial intelligence, developed by xAI with the explicit goal of creating a more engaging, useful, and versatile AI assistant. At its core, Grok is a large language model (LLM) designed to understand and generate human language, answer questions, solve problems, and assist with a wide range of tasks through natural conversation.

What distinguishes Grok from many of its predecessors is its integrated access to real-time information, initially through posts on the X platform and later also through web search. This allows it to provide current answers on recent events and developments beyond its training data, addressing one of the most significant limitations of traditional LLMs: their knowledge cutoff dates.

The name "Grok" itself comes from Robert A. Heinlein's 1961 science fiction novel "Stranger in a Strange Land," where it means "to understand completely and intuitively." This naming reflects the system's aspiration to develop a deep, intuitive understanding of user queries and provide insightful, helpful responses that go beyond simple information retrieval.

Grok was designed with several core purposes in mind:

- To provide an AI assistant that can access and incorporate real-time information from the internet

- To deliver a more engaging, conversational experience with what xAI describes as a "rebellious" personality

- To offer an alternative to existing AI systems that Elon Musk has characterized as overly cautious and politically biased

- To advance the development of artificial general intelligence (AGI) through a "maximum truth-seeking" approach

As an AI system, Grok combines pre-trained knowledge with real-time information access to serve as a comprehensive assistant for research, problem-solving, content creation, and various specialized tasks.

Historical Context

Grok AI emerged from a confluence of technological developments and a specific vision for how AI assistants should function:

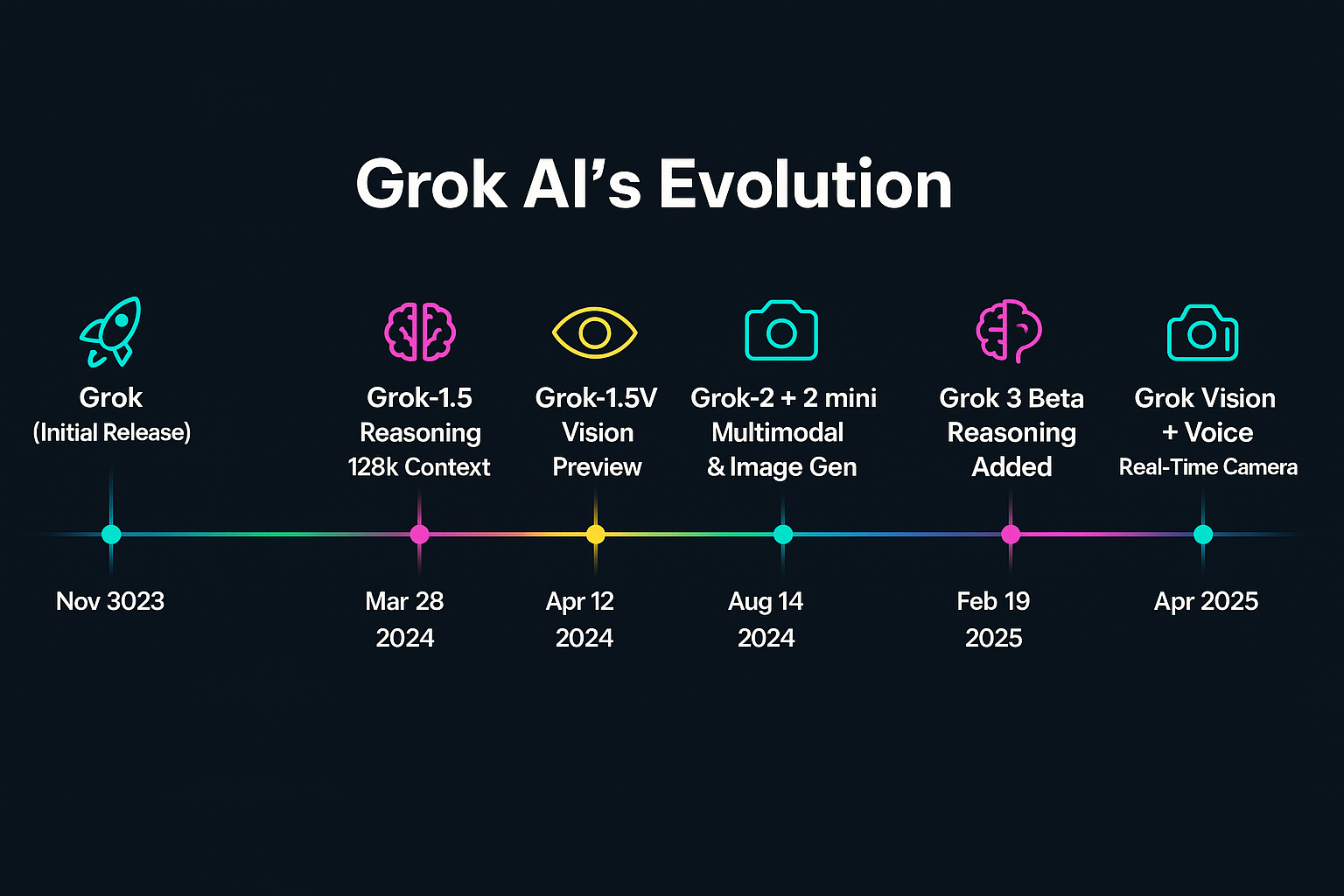

Early Development (2023):

- Elon Musk, who co-founded OpenAI and left its board in 2018, and who acquired Twitter (now X) in October 2022, established xAI in March 2023 with the stated purpose of understanding the "true nature of the universe" and developing AI that is an alternative to the approaches of companies like OpenAI and Anthropic.

- The xAI team was assembled with researchers and engineers from organizations including DeepMind, OpenAI, Google Research, Microsoft Research, and Tesla.

Initial Release:

- Grok-1 was announced on November 4, 2023, and made available to a limited group of users as part of X's Premium+ subscription service.

- This first release positioned Grok as a conversational AI with real-time knowledge of the world via the X platform and a distinctive personality described as "rebellious" and willing to answer questions that other AI systems might refuse.

Subsequent Development:

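- On March 17, 2024, xAI released the weights and network architecture of the Grok-1 base model under the Apache 2.0 license.

- Later in March 2024, xAI announced Grok-1.5, a significant update that improved reasoning, particularly on coding and math tasks, and extended the context window to 128,000 tokens.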

- April 2024 saw the introduction of Grok-1.5V, which added multimodal capabilities, allowing the model to understand and process images alongside text.

- Throughout 2024, xAI has continued to expand access to Grok while enhancing its capabilities and fine-tuning its performance across various tasks.

This rapid development timeline reflects xAI's aggressive approach to advancing AI technology and challenging established players in the field. Grok's evolution has been characterized by quick iterations and a focus on capabilities that differentiate it from competitors.

xAI Background

xAI (pronounced "X AI") is an artificial intelligence company founded by Elon Musk in March 2023, with the official announcement of the company coming in July 2023. The company was established with a distinctive mission that reflects Musk's vision for AI development.

Company Mission: xAI's stated mission is to "understand the true nature of the universe." More practically, the company aims to develop AI systems that:

- Pursue "maximum truth-seeking"

- Provide an alternative to what Musk has characterized as overly cautious and politically biased AI from other major developers

- Advance the path toward artificial general intelligence (AGI)

Leadership and Team:

- Founder and Executive Chairman: Elon Musk

- CEO: As of early 2025, leadership continues to evolve

- The technical team includes AI researchers and engineers with backgrounds from leading organizations in the field, including DeepMind, OpenAI, Google Research, Microsoft Research, and Tesla's Autopilot team.

Strategic Positioning: xAI has positioned itself as an independent AI company that nonetheless maintains close ties with X (formerly Twitter) and other Musk-led companies. This relationship with X has provided Grok with a ready distribution platform through X's Premium+ subscription service.

Funding and Resources:

- In May 2024, xAI announced a $6 billion Series B funding round, with investors including Sequoia Capital, Andreessen Horowitz, Fidelity Management, and others; it announced a further $6 billion round in December 2024.

- The company has access to significant computing resources, which are essential for training and running advanced AI models like Grok.

Research Focus: Beyond developing Grok as a product, xAI has indicated research interests in several areas:

- Reasoning capabilities in large language models

- Truthfulness and bias mitigation

- Multimodal understanding (text, images, and potentially other modalities)

- AI alignment and safety

xAI represents a significant new entrant in the competitive landscape of AI research and development, with substantial resources and a distinctive philosophical approach that shapes the development of Grok AI.

Grok's Position in the AI Landscape

Grok occupies a distinctive position in the evolving landscape of large language models and AI assistants:

Competitive Positioning: Grok competes directly with other leading AI assistants, including:

- OpenAI's ChatGPT and GPT models

- Anthropic's Claude

- Google's Gemini (formerly Bard)

- Meta's Llama-based assistants

Within this competitive field, Grok has carved out a distinctive identity based on several differentiating factors.

Technological Positioning:

- Grok is among the more advanced general-purpose LLMs, though the benchmarks xAI published for Grok-1.5 show it slightly behind GPT-4 and Claude 3 Opus on most of the reasoning and knowledge tasks reported.

- Its integrated real-time information access places it among the models that directly address the knowledge cutoff limitation of traditional LLMs.

- Grok's development timeline shows rapid iteration and improvement, indicating xAI's commitment to quickly enhancing its capabilities.

Philosophical Positioning:

- Grok has been explicitly positioned as a "maximum truth-seeking" AI, with xAI emphasizing its willingness to engage with controversial topics that other AI systems might avoid.

- The model reflects Musk's stated concerns about what he perceives as political bias in other AI systems, offering what xAI characterizes as a more politically neutral alternative.

- Grok's "rebellious" personality represents a deliberate departure from the more cautious, neutral tone adopted by many competing AI assistants.

Market Positioning:

- Initially available exclusively to X Premium+ subscribers, Grok has a more restricted access model than many competitors, though this is likely to evolve as xAI expands its availability.

- The close integration with X (formerly Twitter) provides a distinctive distribution channel that leverages Musk's existing technology ecosystem.

- Grok's association with Elon Musk gives it high visibility and a ready audience among Musk's substantial following, while also potentially polarizing perception among those with varying opinions about Musk.

Developmental Trajectory: Grok appears to be on a path of rapid capability expansion, with xAI demonstrating a commitment to quick iterations and feature additions. This suggests a trajectory that will likely see Grok continue to evolve as a significant player in the AI assistant space, potentially with increased focus on enterprise applications as the model matures.

The model represents an important new entrant in a field that continues to develop at an extraordinary pace, adding a distinctive voice and approach to the ongoing conversation about how AI assistants should function and what role they should play in society.

Technical Foundation

Model Architecture

Grok AI is built on a transformer-based architecture, the dominant paradigm for large language models since the publication of the "Attention Is All You Need" paper by Vaswani et al. in 2017. xAI has published only partial technical details: the March 2024 open-weights release of Grok-1 documents that model's base architecture, while later versions remain largely undocumented. Where the description below goes beyond that release, it outlines a likely structure based on typical approaches in state-of-the-art LLMs.

Core Architecture Components:

- Transformer Foundation:

- Like other modern LLMs, Grok utilizes a transformer architecture with self-attention mechanisms that allow the model to weigh the importance of different words in context.

- Grok-1 is a decoder-only transformer, similar in this respect to GPT models, an architecture well suited to text generation tasks.

- This architecture allows Grok to process input text and generate appropriate responses by predicting the most likely next tokens in a sequence.

- Attention Mechanisms:

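- Multi-head attention mechanisms enable the model to focus on different aspects of the input simultaneously. In Grok-1, 48 query heads share 8 key/value heads, a grouped-query arrangement that reduces the memory needed to cache keys and values during generation.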

- This allows Grok to capture complex relationships between words and concepts across long contexts.

- The attention patterns learned during training help the model understand syntax, semantics, and factual relationships.

- Layer Structure:

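- Grok-1 consists of 64 transformer layers stacked on top of each other, with each layer processing the output of the previous layer.

- Each layer pairs an attention block with a feed-forward block. In Grok-1 the feed-forward block is a Mixture-of-Experts (MoE) layer: a router sends each token to 2 of 8 expert networks, so only part of the model's weights is used for any given token.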

- Layer normalization and residual connections likely help stabilize training and improve performance.

- Context Window:

- Grok-1 has a context window of 8,192 tokens, and Grok-1.5 extended this to 128,000 tokens, allowing the model to process and maintain awareness of long conversations and documents.

- This context management is crucial for maintaining coherence in extended interactions.

- Tokenization:

- Like other LLMs, Grok processes text by breaking it down into tokens, which may be words, parts of words, or individual characters depending on the tokenization scheme.

- Grok-1's open release includes a SentencePiece tokenizer with a vocabulary of 131,072 tokens, allowing it to represent a wide range of text efficiently.

- Web Browsing Capability:

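- A distinctive feature of Grok is its access to real-time information, provided by the surrounding system rather than by the model weights alone: initially posts on the X platform, and later also web search.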

- This likely involves specialized components that:

- Translate user queries into appropriate search queries

- Process and extract information from search results and web pages

- Integrate this information with the model's existing knowledge

- Format responses that incorporate this real-time information

- Multimodal Extensions (in newer versions):

- Grok-1.5V introduced multimodal capabilities, allowing the model to process images alongside text.

- This likely involves additional architectural components for visual processing, such as vision transformers or convolutional neural networks, and mechanisms to align visual and textual representations.
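
The attention computation described above can be illustrated in plain Python. This is a minimal single-head sketch with a causal mask (each position attends only to itself and earlier positions, as in a decoder-only model); it omits the learned query/key/value projections, the multiple heads, and the batched tensor operations a real model uses, and nothing in it is taken from xAI's code.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with a causal mask.

    q, k and v hold one row per token position. Position i may attend only
    to positions 0..i, so no token can "see" tokens that come after it.
    """
    d = len(q[0])
    out: Matrix = []
    for i, q_i in enumerate(q):
        # Similarity of token i's query to each visible key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q_i, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out = causal_self_attention(q, k, v)
# out[0] == [1.0, 2.0]: the first token can only attend to itself.
```

Multi-head attention runs several such computations in parallel on different learned projections of the same input and concatenates the results.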

The architecture represents a sophisticated integration of transformer-based language modeling with specialized components for real-time information access and, in newer versions, multimodal understanding. This foundation enables Grok's core capabilities while establishing a framework that can be expanded and enhanced in future iterations.
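
According to xAI's open-weights release, Grok-1's feed-forward layers use a Mixture-of-Experts design with 8 experts, 2 of which are used for each token. The sketch below illustrates top-2 gating in general terms only: the router vectors, the toy experts, and the function names are hypothetical, and xAI's actual implementation (written in JAX) differs in detail.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def top2_moe_layer(x: Vector, router: List[Vector], experts: List[Expert]) -> Tuple[Vector, List[int]]:
    """Send one token vector x through its two highest-scoring experts.

    router[e] is a (hypothetical) learned weight vector for expert e; the
    router's logit for expert e is the dot product of router[e] and x.
    Returns the combined output and the indices of the experts that ran.
    """
    logits = [sum(w * xi for w, xi in zip(r, x)) for r in router]
    # Indices of the two largest router logits.
    chosen = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:2]
    # Softmax over the chosen logits only, so the two gate weights sum to 1.
    m = max(logits[e] for e in chosen)
    exps = [math.exp(logits[e] - m) for e in chosen]
    gates = [g / sum(exps) for g in exps]
    # Only the chosen experts are evaluated; the others do no work for this token.
    out = [0.0] * len(x)
    for gate, e in zip(gates, chosen):
        y = experts[e](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy setup: 8 experts, where expert e simply scales its input by (e + 1).
experts = [lambda vec, s=e + 1: [s * vi for vi in vec] for e in range(8)]
router = [[float(e), 0.0] for e in range(8)]  # favors higher-numbered experts
y, used = top2_moe_layer([1.0, 0.0], router, experts)
# used == [7, 6]
```

The design trade-off is that all experts must be stored, but each token pays the compute cost of only two of them.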

Training Methodology

The training of Grok AI follows a multi-stage process typical of large language models, though with specific approaches that reflect xAI's priorities and technical philosophy. While xAI has not disclosed comprehensive details about Grok's training, we can outline the likely methodology based on available information and standard practices in the field.

Pre-training Phase:

- Data Collection and Curation:

- Grok was trained on a diverse corpus of text drawn from the internet and other sources, likely including books, articles, websites, code repositories, and other text data.

- According to xAI's Grok-1 model card, the training data came from the internet up to the third quarter of 2023, plus data provided by xAI's "AI Tutors" (human reviewers). This suggests a broad data collection approach similar to other large language models.

- The data curation process likely involved filtering for quality and removing certain types of harmful or problematic content, though xAI's stated philosophy suggests potentially different filtering criteria than those used by companies like OpenAI or Anthropic.

- Self-supervised Learning:

- Like other modern LLMs, Grok was likely pre-trained using self-supervised learning objectives, primarily next-token prediction.

- This involves training the model to predict the next token in a sequence given the previous tokens, allowing it to learn patterns, relationships, and knowledge from the training data without requiring explicit labeling.

- This pre-training process enables the model to develop general language understanding and knowledge representation.

- Distributed Training Infrastructure:

- Training a model of Grok's scale requires substantial computational resources.

- xAI has described a custom training and inference stack built on JAX, Rust, and Kubernetes; training at this scale is distributed across large GPU clusters, with that software coordinating the work across the hardware.

- Optimization techniques like mixed-precision training were probably used to improve efficiency.
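
The next-token objective can be made concrete with a small sketch: the training targets are just the input shifted by one position, and the loss is the average negative log-probability the model assigns to each true next token (cross-entropy). The two "models" below are hypothetical lookup functions standing in for a neural network; xAI's actual loss implementation is not public.

```python
import math
from typing import Callable, Dict, List, Tuple

# A "model" here is any function mapping a context (token IDs so far) to a
# probability for each possible next token ID.
Model = Callable[[List[int]], Dict[int, float]]


def next_token_pairs(tokens: List[int]) -> List[Tuple[List[int], int]]:
    """Build (context, target) pairs: the target at step t is simply token t + 1.

    The labels come from the text itself, which is what makes the objective
    self-supervised.
    """
    return [(tokens[: t + 1], tokens[t + 1]) for t in range(len(tokens) - 1)]


def average_next_token_loss(tokens: List[int], model: Model) -> float:
    """Mean cross-entropy: the average negative log-probability of each true next token."""
    pairs = next_token_pairs(tokens)
    return sum(-math.log(model(ctx)[target]) for ctx, target in pairs) / len(pairs)


VOCAB_SIZE = 4


def uniform_model(context: List[int]) -> Dict[int, float]:
    """Knows nothing: every token is equally likely, so the loss is log(VOCAB_SIZE)."""
    return {i: 1.0 / VOCAB_SIZE for i in range(VOCAB_SIZE)}


def counting_model(context: List[int]) -> Dict[int, float]:
    """Has 'learned' that each token is followed by the next ID (mod VOCAB_SIZE)."""
    nxt = (context[-1] + 1) % VOCAB_SIZE
    return {i: (1.0 if i == nxt else 0.0) for i in range(VOCAB_SIZE)}


sequence = [0, 1, 2, 3, 0]
print(average_next_token_loss(sequence, uniform_model))   # about 1.386, i.e. log(4)
print(average_next_token_loss(sequence, counting_model))  # 0.0: every next token predicted with certainty
```

Pre-training adjusts the network's parameters by gradient descent to push this loss down across the whole corpus.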

Fine-tuning and Alignment Phase:

- Supervised Fine-tuning (SFT):

- After pre-training, Grok was likely fine-tuned on examples of high-quality, helpful responses to various user queries.

- This process helps align the model's outputs with human preferences and expectations for an AI assistant.

- The fine-tuning dataset likely included examples showing how to follow instructions, provide helpful information, and engage in natural conversation.

- Reinforcement Learning from Human Feedback (RLHF):

- xAI probably employed RLHF techniques to further refine Grok's responses.

- This involves collecting human preferences between different model outputs, training a reward model based on these preferences, and then using reinforcement learning to optimize the model toward higher reward outputs.

- Given xAI's stated philosophy, their RLHF process may have emphasized different values than other AI companies, potentially prioritizing "maximum truth-seeking" and a willingness to engage with a wider range of topics.

- Safety Alignment:

- While maintaining its "rebellious" nature, Grok still required safety guardrails to prevent genuinely harmful outputs.

- xAI likely implemented specialized training and filtering to prevent the model from producing content that could enable illegal activities, cause direct harm, or violate basic ethical standards.

- This safety alignment process may have been calibrated differently than competing models, reflecting xAI's stated goal of creating a less restrictive AI.
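
The reward-model step of RLHF is commonly trained with a pairwise (Bradley-Terry) loss, as in published RLHF work such as InstructGPT; xAI has not disclosed what it used. The sketch trains a toy linear reward model on hypothetical feature vectors ([accuracy, length]) for (chosen, rejected) response pairs. A real reward model is itself a large neural network scoring full text, and the subsequent reinforcement-learning step that optimizes the LLM against it is not shown.

```python
import math
from typing import List, Sequence, Tuple

Features = Sequence[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Pairwise (Bradley-Terry) loss -log sigmoid(r_chosen - r_rejected), in a numerically stable form."""
    d = r_chosen - r_rejected
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))


def reward(w: Sequence[float], f: Features) -> float:
    """A linear reward model: the score is a weighted sum of response features."""
    return sum(wi * fi for wi, fi in zip(w, f))


def train_reward_model(pairs: List[Tuple[Features, Features]], dim: int,
                       lr: float = 0.5, epochs: int = 200) -> List[float]:
    """Gradient descent on the pairwise loss over (chosen, rejected) feature pairs."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            diff = [c - r for c, r in zip(chosen, rejected)]
            # By linearity, reward(chosen) - reward(rejected) == reward(w, diff).
            d = reward(w, diff)
            # dLoss/dd = -(1 - sigmoid(d)), so stepping against the gradient adds this multiple of diff.
            step = lr * (1.0 - sigmoid(d))
            w = [wi + step * di for wi, di in zip(w, diff)]
    return w


# Hypothetical features per response: [accuracy, length]; the first item of each pair was preferred.
pairs = [([0.9, 0.2], [0.3, 0.8]), ([0.8, 0.5], [0.2, 0.4]), ([0.7, 0.1], [0.6, 0.9])]
w = train_reward_model(pairs, dim=2)
print(w)
```

After training, the reward model scores each preferred response above its rejected counterpart; in RLHF, that score then serves as the signal the language model is optimized to increase.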

Specialized Capability Training:

- Web Browsing Integration:

- A distinctive aspect of Grok's training would have been developing its capability to effectively access and utilize real-time information.

- This likely involved specialized training on:

- Generating effective search queries based on user questions

- Extracting relevant information from search results and web pages

- Synthesizing this information with the model's existing knowledge

- Providing appropriate attribution and handling conflicting information

- Multimodal Training (for Grok-1.5V):

- The multimodal version of Grok required additional training on image-text pairs to develop the ability to understand and discuss visual content.

- This likely involved specialized datasets containing images paired with descriptive text, captions, or related discussions.

- Continuous Improvement:

- Grok's rapid version iterations suggest an ongoing training methodology that incorporates new data, techniques, and capabilities.

- This likely includes analyzing user interactions to identify areas for improvement and updating the model accordingly.

This multi-stage training methodology has enabled Grok to develop its distinctive capabilities while reflecting xAI's philosophical approach to AI development.

Parameter Scale

The parameter scale of a large language model is a crucial factor in determining its capabilities, as it directly influences the model's capacity to learn patterns, store knowledge, and generate coherent, contextually appropriate responses. xAI disclosed the parameter count of Grok-1 with its March 2024 open-weights release, but has not published figures for later versions.

Parameter Count:

Grok-1's open-weights release reports 314 billion parameters in a Mixture-of-Experts design, with roughly 25% of the weights active for any given token. This places it in the same general scale as other large models of its period, most of whose sizes are unofficial estimates:

- GPT-4 (estimated to have approximately 1 trillion parameters)

- Claude 2 (estimated to have over 100 billion parameters)

- PaLM 2 (estimated at 340 billion parameters)

xAI has not disclosed the parameter count of Grok-1.5. It may have kept a similar scale, with architecture improvements to enhance efficiency and capability per parameter.

Significance of Parameter Scale:

- Knowledge Capacity:

- With a parameter count in the hundreds of billions, Grok has substantial capacity to store factual knowledge, linguistic patterns, and reasoning capabilities.

- This scale allows the model to "memorize" vast amounts of information from its training data, enabling it to answer questions across diverse domains without always needing to access external information.

- Reasoning Depth:

- Larger parameter counts generally correlate with improved reasoning capabilities, allowing Grok to handle more complex logical problems, follow multi-step instructions, and maintain coherence across longer contexts.

- The scale of Grok appears sufficient to support sophisticated reasoning, though benchmarks suggest it may not yet match the most advanced models in this regard.

- Generative Capability:

- Grok's parameter scale enables it to generate diverse, contextually appropriate text across various styles, formats, and domains.

- This includes creative writing, technical explanations, conversational responses, and code generation, among other capabilities.

- Computational Requirements:

- A model with hundreds of billions of parameters requires substantial computational resources for both training and inference.

- For inference (running the model to generate responses), this necessitates high-performance hardware, which may influence deployment options and access models.

- Evolutionary Context:

- Grok's parameter scale represents a significant but not revolutionary step in the ongoing scaling of large language models.

- It reflects xAI's approach of building a competitive model while potentially focusing on other aspects of development, such as real-time information access, rather than simply maximizing parameter count.
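
To make the computational requirements concrete, the back-of-the-envelope sketch below uses the figures from Grok-1's open-weights release (314 billion parameters, roughly 25% of them active per token). It estimates only the memory needed to hold the weights at common precisions and uses the common rule of thumb of about 2 floating-point operations per active parameter per generated token; activations, the key/value cache, and framework overhead are ignored, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


TOTAL_PARAMS = 314e9     # Grok-1 total parameter count, per its open-weights release
ACTIVE_FRACTION = 0.25   # roughly 25% of weights active per token (approximate)
active_params = TOTAL_PARAMS * ACTIVE_FRACTION

for label, nbytes in [("fp32", 4), ("bf16", 2), ("int8", 1)]:
    print(f"{label}: {weight_memory_gb(TOTAL_PARAMS, nbytes):,.0f} GB of weights")
# fp32: 1,256 GB, bf16: 628 GB, int8: 314 GB

# Rule of thumb: a forward pass costs about 2 FLOPs per active parameter per token.
flops_per_token = 2 * active_params
print(f"~{flops_per_token / 1e9:,.0f} GFLOPs per generated token")
```

Because every expert must stay loaded, memory scales with the total parameter count, while per-token compute scales with the active count; even at 8-bit precision the weights alone exceed the memory of any single current GPU, which is why such models are served across multiple accelerators.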

Efficiency Considerations:

It's important to note that raw parameter count is not the only determinant of model capability. Recent developments in the field have demonstrated that:

- Architectural improvements can increase efficiency, allowing smaller models to perform comparably to larger ones.

- Specialized training approaches can enhance performance without increasing parameter count.

- Models like Grok that integrate external knowledge sources (web browsing) may achieve greater effective capability with fewer parameters than fully self-contained models.

The parameter scale of Grok represents a significant investment in model capacity, placing it among the more advanced language models available while still being exceeded by the very largest models in the field. This scale supports Grok's broad capabilities while working in conjunction with its architectural design and training methodology to determine its overall performance characteristics.

Language Processing Approach

Grok AI processes language through a sophisticated approach that combines statistical pattern recognition with contextual understanding, enabling it to interpret user inputs and generate appropriate responses. This approach involves several key components and processes:

Tokenization and Input Processing:

- Tokenization:

- Grok breaks down input text into tokens, which may be words, parts of words, or individual characters depending on the tokenization scheme.

- This tokenization uses a subword method: Grok-1's open release ships a SentencePiece tokenizer, and subword schemes such as SentencePiece or Byte-Pair Encoding (BPE) can handle words not seen during training by splitting them into smaller known pieces.

- Each token is mapped to an integer ID in the model's vocabulary.

- Embedding:

- These IDs are then used to look up embeddings: dense vector representations, learned during training, that capture semantic meaning.

- The embedding space allows the model to represent similar words or concepts as numerically similar vectors.

- These embeddings serve as the input to the transformer layers of the model.
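
Subword tokenization of the BPE kind can be illustrated with a toy learner: start from single characters, then repeatedly merge the most frequent adjacent pair. This is a teaching sketch on a four-word corpus, not Grok's SentencePiece tokenizer; the corpus, the number of merges, and the 4-dimensional random embedding table are hypothetical stand-ins for learned values.

```python
import random
from collections import Counter
from typing import List, Optional, Tuple

Pair = Tuple[str, str]


def most_frequent_pair(words: List[List[str]]) -> Optional[Pair]:
    """Count adjacent symbol pairs across all words; return the most common (None if no pairs)."""
    counts: Counter = Counter()
    for symbols in words:
        counts.update(zip(symbols, symbols[1:]))
    return counts.most_common(1)[0][0] if counts else None


def merge_pair(symbols: List[str], pair: Pair) -> List[str]:
    """Replace every occurrence of `pair` in one symbol sequence with the merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(corpus: List[str], num_merges: int) -> List[Pair]:
    """Learn an ordered list of merges, starting from single characters."""
    words = [list(w) for w in corpus]
    merges: List[Pair] = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = [merge_pair(w, pair) for w in words]
    return merges


def encode(word: str, merges: List[Pair]) -> List[str]:
    """Apply the learned merges, in order, to a word."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols


corpus = ["lower", "lowest", "newer", "newest"]
merges = learn_bpe(corpus, num_merges=5)
# Vocabulary: every base character plus every merged symbol, each given an integer ID.
vocab_tokens = sorted({c for w in corpus for c in w} | {a + b for a, b in merges})
token_to_id = {tok: i for i, tok in enumerate(vocab_tokens)}

pieces = encode("lowest", merges)
ids = [token_to_id[p] for p in pieces]
# Embedding lookup: each ID selects one row of a (here random, normally learned) table.
rng = random.Random(0)
embedding_table = [[rng.uniform(-1, 1) for _ in range(4)] for _ in vocab_tokens]
vectors = [embedding_table[i] for i in ids]
```

In this toy version a character never seen in the corpus has no ID; production tokenizers avoid that by falling back to byte-level pieces.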

Contextual Understanding:

- Attention Mechanisms:

- Grok uses self-attention mechanisms to analyze relationships between all tokens in the input.

- This allows the model to consider the entire context when interpreting each word or phrase.

- Multiple attention heads allow the model to focus on different aspects of the relationships between words simultaneously.

- Contextual Representation:

- As information flows through the model's layers, each token's representation is continuously updated based on its context.

- This creates rich, contextual representations that capture the meaning of words as they are used in specific contexts.

- Later layers in the model develop increasingly abstract and sophisticated representations of the text.

- Long-context Processing:

- With a context window of 8,192 tokens in Grok-1 (128,000 tokens in Grok-1.5), Grok can maintain awareness of lengthy conversations or documents.

- This allows it to refer back to information mentioned earlier in a conversation and maintain coherence across extended interactions.
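
How Grok's applications keep a long conversation inside the fixed window is not documented. One common approach is to keep the system prompt and drop the oldest turns once the token budget is exceeded, as sketched below. The `count_tokens` function is a hypothetical stand-in (whitespace splitting instead of a real tokenizer), and the 8,192-token default simply mirrors the context length listed in Grok-1's open release.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (role, text)


def count_tokens(text: str) -> int:
    """Hypothetical stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_window(system_prompt: str, turns: List[Turn], max_tokens: int = 8192,
                  reserve_for_reply: int = 512,
                  counter: Callable[[str], int] = count_tokens) -> List[Turn]:
    """Keep the system prompt plus as many of the most recent turns as fit in the window.

    `reserve_for_reply` leaves room for the tokens the model will generate.
    """
    budget = max_tokens - reserve_for_reply - counter(system_prompt)
    kept: List[Turn] = []
    # Walk from the newest turn backwards, so the latest context survives first.
    for role, text in reversed(turns):
        cost = counter(text)
        if cost > budget:
            break  # stop at the first turn that does not fit, keeping the history contiguous
        kept.append((role, text))
        budget -= cost
    return [("system", system_prompt)] + kept[::-1]


history = [("user", "a b c"), ("assistant", "d e"), ("user", "f")]
print(fit_to_window("s", history, max_tokens=10, reserve_for_reply=4))
# [('system', 's'), ('assistant', 'd e'), ('user', 'f')]
```

Dropping whole turns is simple but loses early context entirely; an alternative is to replace older turns with a model-written summary, trading some fidelity for length.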

Response Generation:

- Autoregressive Generation:

- Grok generates responses one token at a time in an autoregressive manner.

- Each new token is predicted based on all previously generated tokens and the original input.

- This sequential generation process allows the model to create coherent, contextually appropriate text.

- Sampling Strategies:

- The decoding procedure that turns the model's output probabilities into text uses sampling strategies to choose each next token.

- These likely include temperature scaling (adjusting randomness), top-p or nucleus sampling (restricting choices to the smallest set of most probable tokens whose combined probability reaches a threshold p), and other techniques to balance creativity with coherence.

- Different sampling approaches may be used depending on the nature of the task (e.g., more deterministic for factual questions, more creative for open-ended generation).

- Stopping Criteria:

- A response typically ends when the model generates a special end-of-sequence token, which it learns to emit at natural ending points; the serving system also enforces maximum length limits.
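
The generation loop, temperature, top-p filtering, and both stopping criteria can be combined in a short sketch. The `toy_model` function is a hypothetical stand-in that returns fixed scores (logits) instead of running a neural network, and the parameter names are illustrative rather than Grok's API.

```python
import math
import random
from typing import Callable, Dict, List

Logits = Dict[str, float]


def sample_next(logits: Logits, temperature: float, top_p: float, rng: random.Random) -> str:
    """Pick the next token using temperature scaling followed by top-p (nucleus) filtering."""
    if temperature <= 0:
        # Greedy decoding: deterministic, always the highest-scoring token.
        return max(logits, key=logits.get)
    scaled = {tok: value / temperature for tok, value in logits.items()}
    m = max(scaled.values())
    weights = {tok: math.exp(v - m) for tok, v in scaled.items()}
    z = sum(weights.values())
    ranked = sorted(((w / z, tok) for tok, w in weights.items()), reverse=True)
    # Keep the smallest set of most probable tokens whose cumulative probability reaches top_p.
    nucleus, cumulative = [], 0.0
    for p, tok in ranked:
        nucleus.append((p, tok))
        cumulative += p
        if cumulative >= top_p:
            break
    # Sample from the nucleus in proportion to the renormalized probabilities.
    r = rng.random() * sum(p for p, _ in nucleus)
    for p, tok in nucleus:
        r -= p
        if r <= 0:
            return tok
    return nucleus[-1][1]  # guard against floating-point rounding


def generate(next_logits: Callable[[List[str]], Logits], prompt: List[str], max_new_tokens: int = 20,
             eos: str = "<eos>", temperature: float = 0.0, top_p: float = 1.0, seed: int = 0) -> List[str]:
    """Autoregressive loop: each new token is conditioned on everything generated so far."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):  # stopping criterion 1: length limit
        tok = sample_next(next_logits(tokens), temperature, top_p, rng)
        if tok == eos:  # stopping criterion 2: end-of-sequence token
            break
        tokens.append(tok)
    return tokens


def toy_model(context: List[str]) -> Logits:
    """Hypothetical stand-in for an LLM: strongly prefers a fixed continuation."""
    script = {"the": "cat", "cat": "sat", "sat": "<eos>"}
    favored = script.get(context[-1], "<eos>")
    return {tok: (5.0 if tok == favored else 0.0) for tok in ["the", "cat", "sat", "<eos>"]}


print(generate(toy_model, ["the"]))  # greedy: ['the', 'cat', 'sat']
# Also ['the', 'cat', 'sat']: the favored token alone holds about 98% of the probability, above top_p.
print(generate(toy_model, ["the"], temperature=1.0, top_p=0.9, seed=1))
```

Lower temperatures and smaller top_p values make output more deterministic, which suits factual answers; higher values admit more of the distribution, which suits open-ended writing.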

Special Handling Mechanisms:

- Query Analysis:

- Grok analyzes user queries to determine their intent, required expertise domain, and whether they need current information.

- This analysis helps the model decide whether to rely on its internal knowledge or activate its web browsing capability.

- Knowledge Integration:

- When using web browsing, Grok must integrate information from external sources with its internal knowledge.

- This involves resolving potential contradictions, assessing source reliability, and presenting a coherent synthesis.

- Format Recognition:

- The model can recognize and generate text in various formats, including natural language, code, lists, tables, and structured data.

- This requires understanding format-specific conventions and syntax.

- Multilingual Processing:

- Grok has capabilities for processing multiple languages, though its primary strength is in English.

- This requires understanding the syntax, semantics, and unique characteristics of different languages.

- Safety Filtering:

- Grok implements various safety mechanisms to filter potentially harmful outputs.

- This includes detecting and avoiding prohibited content categories while still maintaining its "rebellious" approach to answering questions more broadly than some competitors.

Grok's language processing approach represents a sophisticated integration of these components, enabling it to understand complex queries, access and process relevant information, and generate appropriate responses across a wide range of topics and tasks.

Core Capabilities

Natural Language Understanding

Grok AI demonstrates sophisticated natural language understanding (NLU) capabilities that allow it to interpret user inputs with nuance and accuracy. This understanding forms the foundation for all of Grok's functionality, enabling it to process queries, detect intent, and generate appropriate responses.

Semantic Understanding: Grok can grasp the meaning behind words and phrases, not just their literal definitions. This semantic understanding allows the model to:

- Interpret ambiguous language: When a word or phrase has multiple potential meanings, Grok can usually determine the intended meaning based on context.

- Recognize synonyms and paraphrases: The model understands that different phrasings can express the same underlying concept, allowing it to respond appropriately regardless of how a question is formulated.

- Process figurative language: Grok can interpret metaphors, similes, idioms, and other non-literal expressions, though with varying degrees of accuracy depending on the complexity and cultural specificity of the expression.

- Recognize entities and concepts: The model identifies people, places, organizations, dates, and abstract concepts mentioned in text, understanding their significance and relationships.

Syntactic Processing: Grok demonstrates strong syntactic understanding, allowing it to:

- Parse complex sentences: The model can unravel nested clauses, complex sentence structures, and grammatical nuances.

- Handle grammatical variations: Grok can process text with varying grammatical structures, tenses, and voices, extracting the core meaning regardless of syntax.

- Recognize parts of speech: The model understands the functions of different words within sentences, distinguishing between nouns, verbs, adjectives, and other parts of speech.

- Process queries with linguistic errors: Grok can often understand the intent behind inputs containing typos, grammatical errors, or unusual phrasing.

Intent Recognition: A crucial aspect of Grok's natural language understanding is its ability to recognize user intent:

- Query classification: The model categorizes inputs into different types of requests (questions, instructions, conversation, creative tasks, etc.) and responds accordingly.

- Domain identification: Grok can determine which knowledge domain a query relates to (science, history, technology, arts, etc.), allowing it to draw on relevant information.

- Specificity assessment: The model evaluates how specific or open-ended a query is, adjusting its response style and depth accordingly.

- Implicit requests: Grok can often identify unstated but implied requests in user inputs, responding to the underlying need rather than just the explicit question.

Contextual Understanding: Grok maintains contextual awareness throughout conversations:

- Reference resolution: The model can resolve pronouns and other references to previously mentioned entities, maintaining coherence across conversation turns.

- Topic tracking: Grok follows topic shifts and maintains awareness of the conversation's subject matter, even in lengthy interactions.

- User state awareness: The model attempts to track implied user needs, knowledge level, and satisfaction throughout a conversation.

- Temporal understanding: Grok can process time-related language and maintain awareness of temporal relationships mentioned in conversation.

Multi-turn Comprehension: In extended conversations, Grok demonstrates:

- Conversational memory: The model maintains awareness of information shared earlier in the conversation, avoiding redundancy and building on established context.

- Progressive elaboration: Grok can handle follow-up questions that add constraints or specifications to earlier queries.

- Clarification processing: When given clarifications or corrections, the model can update its understanding of the user's intent.

- Coherent threading: The model maintains logical and topical coherence across multiple conversation turns.

These natural language understanding capabilities, while impressive, do have limitations. Grok may struggle with highly implicit cultural references, extremely technical domain-specific language without proper context, or detecting subtle emotional nuances in text. However, its overall NLU capabilities provide a strong foundation for its function as an AI assistant, enabling it to process a wide range of queries with impressive accuracy and nuance.

Knowledge Retrieval

Grok AI employs sophisticated knowledge retrieval mechanisms that allow it to access and utilize information from both its pre-trained parameters and external sources. This dual approach to knowledge access represents one of Grok's distinctive capabilities.

Internal Knowledge Base:

Grok's primary knowledge source is the information encoded in its model parameters during training:

- Parametric Knowledge Storage:

- During training on vast text corpora, Grok encoded an enormous number of facts, concepts, and relationships in its neural network parameters.

- This parametric knowledge spans diverse domains including science, history, literature, programming, current events (up to its training cutoff), and general world knowledge.

- Associative Retrieval:

- When processing a query, Grok activates relevant portions of its neural network, effectively "retrieving" knowledge related to the query.

- This retrieval is associative rather than lookup-based, allowing the model to connect concepts and generate insights beyond simple fact recall.

- Knowledge Organization:

- While not explicitly structured like a traditional database, Grok's internal knowledge appears to be organized in ways that respect taxonomic relationships, logical implications, and semantic associations.

- This implicit organization allows the model to navigate its knowledge effectively, even across domains.

- Knowledge Integration:

- Grok can combine facts from different domains to answer interdisciplinary questions or draw connections between seemingly unrelated topics.

- This integration capability demonstrates a level of knowledge synthesis beyond simple retrieval.

External Knowledge Access:

Grok's distinctive feature is its ability to supplement its internal knowledge with real-time information from the web:

- Query Transformation:

- When Grok determines that a query requires current information or details beyond its training data, it can transform the user's question into appropriate search queries.

- This transformation process identifies key search terms and formulates queries likely to retrieve relevant information.

- Web Search Execution:

- Grok executes these search queries against web search engines, retrieving a set of potentially relevant results.

- The model likely adjusts its search approach based on initial results, refining queries when needed.

- Content Extraction:

- From search results or specific web pages, Grok extracts relevant information, focusing on content that addresses the user's query.

- This extraction process involves identifying key passages, facts, or data points within sometimes lengthy or complex sources.

- Information Evaluation:

- Grok attempts to assess the reliability and relevance of information retrieved from external sources.

- This evaluation likely considers factors such as source credibility, consistency with known facts, and relevance to the query.

- Knowledge Synthesis:

- The model integrates information from multiple sources, attempting to resolve contradictions and present a coherent synthesis.

- This synthesis combines externally retrieved information with Grok's internal knowledge to provide comprehensive responses.
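How Grok actually extracts content from web pages is not described here, so the following is only a minimal, hypothetical sketch of the content extraction step, built on Python's standard html.parser module. It assumes a simple tag-based filter (text inside navigation, headers, footers, sidebars, scripts, and styles is discarded) followed by a crude keyword-overlap relevance check. The names SKIP_TAGS, MainTextExtractor, and extract_relevant, the chosen tags, and the relevance rule are illustrative assumptions, not Grok's method.

```python
import re
from html.parser import HTMLParser

# Hypothetical list of tags treated as page chrome rather than substantive content.
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside", "form"}


def words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, used for a crude relevance check."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class MainTextExtractor(HTMLParser):
    """Collects text chunks that are not nested inside any SKIP_TAGS element."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0          # > 0 while inside a skipped element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.skip_depth == 0:
            self.chunks.append(text)


def extract_relevant(html: str, query: str) -> list[str]:
    """Return main-content chunks sharing at least one longer word with the query."""
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    terms = {w for w in words(query) if len(w) > 3}  # drop short words like "who"
    return [chunk for chunk in parser.chunks if terms & words(chunk)]


page = """<html><head><style>p { color: red; }</style></head><body>
<nav>Home | News | Login</nav>
<p>Grok was developed by xAI.</p>
<aside>Subscribe for updates!</aside>
<p>The weather is nice today.</p>
<footer>Copyright notice</footer>
</body></html>"""

print(extract_relevant(page, "Who developed Grok?"))  # ['Grok was developed by xAI.']
```

Real pages are far messier than this sample; production extractors typically combine structural cues like these with statistical models of which blocks carry the main content.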

Limitations and Challenges:

Despite its sophisticated knowledge retrieval capabilities, Grok faces several challenges:

- Hallucination Risk:

- Like all current LLMs, Grok may occasionally "hallucinate" information, presenting incorrect facts with confidence.

- This risk exists for both internal knowledge retrieval and external information synthesis.

- Source Reliability:

- When accessing external information, Grok must navigate the varying reliability of web sources.

- The model may sometimes retrieve and present information from sources of questionable accuracy.

- Knowledge Currency:

- While web access helps with recent information, Grok's understanding of very recent events or rapidly changing situations may still be limited by the quality of search results and by its ability to interpret them correctly.

- Knowledge Depth vs. Breadth:

- Grok's knowledge is broad across many domains but uneven in depth, so its answers may fall short when highly specialized expertise is required.

- Attribution Challenges:

- The model may sometimes struggle to properly attribute information to sources, particularly when synthesizing from multiple references.

Grok's knowledge retrieval capabilities represent a significant advancement in AI assistants, combining the advantages of parametric knowledge with the currency of web access. This hybrid approach allows Grok to provide more complete and up-to-date responses than models limited to their training data, while still leveraging the deep understanding encoded in its parameters.

Real-time Information Access

One of Grok AI's most distinctive capabilities is its ability to access and process real-time information from the internet, addressing a fundamental limitation of traditional large language models that are restricted to knowledge from their training data.

Technical Implementation:

Grok's real-time information access capability appears to involve the following stages:

- Query Analysis and Routing:

- Grok analyzes user queries to determine whether they require current information beyond its training data.

- The model assesses factors such as time-sensitivity, references to recent events, or explicit requests for current data.

- Based on this analysis, Grok decides whether to rely on its internal knowledge or activate its web browsing capability.

- Search Query Formulation:

- When web access is needed, Grok converts the user's question into effective search queries.

- This involves identifying key search terms, potential sources, and query formulations likely to yield relevant results.

- The model may generate multiple search queries for complex questions requiring information from different sources.

- Search Execution and Result Processing:

- Grok executes these search queries against web search engines, retrieving a set of potentially relevant results.

- The model processes search results to identify the most promising sources for the requested information.

- This may involve analyzing snippets, titles, and source credibility to prioritize which links to explore further.

- Web Page Content Extraction:

- From selected search results, Grok can access and process the content of web pages.

- The model extracts relevant information from these pages, focusing on content that addresses the user's query.

- This extraction process involves filtering out advertising, navigation elements, and other irrelevant content to focus on the substantive information.

- Information Synthesis and Integration:

- Grok synthesizes information from multiple web sources, resolving contradictions and inconsistencies when possible.

- The model integrates this web-sourced information with its existing knowledge to provide comprehensive responses.

- This integration process attempts to maintain factual accuracy while presenting information in a coherent, conversational manner.

- Attribution and Sourcing:

- When providing information from external sources, Grok can include citations or references to the original sources.

- This attribution helps users understand where information is coming from and facilitates further exploration if desired.
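The first two stages, query analysis/routing and search query formulation, can be sketched with simple rules. This is a hypothetical illustration only: the cue words, stopword list, assumed training cutoff year, and the function names needs_web_search, formulate_search_query, and route are invented for the example and do not describe Grok's actual decision logic.

```python
import re

# Hypothetical cue words suggesting a query needs information newer than the training data.
TIME_CUES = {"today", "latest", "current", "currently", "now", "recent",
             "recently", "breaking", "yesterday", "tonight"}
# Hypothetical filler words dropped when turning a question into a search query.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "what", "who", "how",
             "do", "does", "did", "of", "in", "on", "for", "to", "about", "me", "tell"}
TRAINING_CUTOFF_YEAR = 2023  # assumed cutoff, for illustration only


def tokens(text: str) -> list[str]:
    """Lowercased alphanumeric tokens in their original order."""
    return re.findall(r"[a-z0-9]+", text.lower())


def needs_web_search(query: str) -> bool:
    """Route to the web if the query has a time cue or names a post-cutoff year."""
    toks = tokens(query)
    if TIME_CUES.intersection(toks):
        return True
    years = [int(t) for t in toks if re.fullmatch(r"(19|20)\d{2}", t)]
    return any(year > TRAINING_CUTOFF_YEAR for year in years)


def formulate_search_query(query: str) -> str:
    """Keep the content-bearing terms of the question, in their original order."""
    return " ".join(t for t in tokens(query) if t not in STOPWORDS)


def route(query: str) -> dict:
    """Decide between internal knowledge and web search, with a query if needed."""
    if needs_web_search(query):
        return {"source": "web", "search_query": formulate_search_query(query)}
    return {"source": "internal", "search_query": None}


print(route("What is the latest news about SpaceX launches?"))
# prints: {'source': 'web', 'search_query': 'latest news spacex launches'}
print(route("Who wrote Stranger in a Strange Land?"))
# prints: {'source': 'internal', 'search_query': None}
```

A model with tool-calling support would more plausibly make this decision itself; fixed keyword rules are used here only to make the listed criteria (time-sensitivity, references to recent events, explicit requests for current data) concrete.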

Types of Real-time Information:

Grok can access various types of current information, including:

- Recent Events and News:

- Updates on current events, breaking news, and recent developments across various domains.

- This allows Grok to discuss events that occurred after its training data cutoff.

- Current Data and Statistics:

- Up-to-date figures, statistics, prices, ratings, and other quantitative information.

- Examples include current stock prices, sports scores, weather conditions, and economic indicators.

- Product Information and Reviews:

- Details about recently released products, services, and their reviews or ratings.

- This information helps Grok provide relevant recommendations and comparisons.

- Business and Organization Updates:

- Current information about companies, organizations, and their recent activities.

- This includes leadership changes, new initiatives, and other organizational developments.

- Technical Documentation:

- Access to current documentation for software, APIs, and technical systems.

- This allows Grok to provide up-to-date technical assistance and reference accurate specifications.

- Academic and Scientific Developments:

- Recent research findings, publications, and scientific advancements.

- This helps Grok discuss cutting-edge developments across scientific fields.

Advantages and Limitations:

The real-time information access capability provides several advantages:

- Overcoming Knowledge Cutoff:

- Grok can discuss events, developments, and information that emerged after its training data cutoff.

- This makes the model more useful for queries requiring current awareness.

- Factual Accuracy:

- Access to current information can improve the accuracy of Grok's responses on time-sensitive topics.

- The model can check its internal knowledge against, or supplement it with, the latest information available online.

- Expanded Knowledge Domain:

- Web access effectively expands Grok's knowledge to include specialized or niche topics that might not have been well-represented in its training data.

- This allows the model to provide information on a wider range of subjects.

However, this capability also has limitations:

- Source Quality Variability:

- The reliability of web-sourced information depends on the quality of available sources.

- Grok may sometimes retrieve and present information from sources of varying credibility.

- Search Limitations:

- The quality of information retrieved depends on the effectiveness of Grok's search queries and the limitations of underlying search engines.

- Some information may be difficult to find if it's not well-indexed or prominently featured in search results.

- Interpretation Challenges:

- Extracting accurate information from web pages requires correct interpretation of sometimes complex or ambiguous content.

- Grok may occasionally misinterpret information, particularly from complex sources or on highly technical topics.

- Access Restrictions:

- Grok cannot access information behind paywalls, login requirements, or other access controls.

- This limits its ability to retrieve information from certain premium or restricted sources.

- Processing Time:

- Web browsing introduces some latency in response generation compared to queries answered from internal knowledge.

- Complex queries requiring multiple searches may take longer to process.

Despite these limitations, Grok's real-time information access represents a significant advancement in AI assistant capabilities, allowing it to provide more current and comprehensive responses than models limited to their training data.

Reasoning Abilities

Grok AI demonstrates sophisticated reasoning capabilities that enable it to process complex problems, follow multi-step instructions, and generate logically sound analyses. These reasoning abilities are central to Grok's function as an advanced AI assistant capable of handling intellectually demanding tasks.

Logical Reasoning:

Grok can apply principles of deductive and inductive logic to analyze problems and draw conclusions:

- Deductive Reasoning:

- The model can follow logical syllogisms and apply general principles to specific cases.

- It demonstrates the ability to recognize logical implications and draw valid conclusions from given premises.

- Example: Given assumptions about a system's rules, Grok can correctly determine what must follow from those rules.

- Inductive Reasoning:

- Grok can identify patterns from specific examples and generalize to broader principles.

- It can form reasonable hypotheses based on limited information, while usually acknowledging the probabilistic nature of such conclusions.

- Example: Given several examples of a pattern, Grok can predict how the pattern might continue.

- Abductive Reasoning:

- The model can generate plausible explanations for observations, considering multiple possible interpretations.

- It attempts to identify the most likely explanation for a given phenomenon based on available information.

- Example: Given symptoms of a system problem, Grok can suggest possible causes, typically starting with the most likely ones.
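The deductive case, determining what must follow from a system's rules, can be made concrete with forward chaining, a classical symbolic technique that repeatedly applies modus ponens until no new conclusions appear. This is not how an LLM such as Grok reasons internally; it only illustrates the kind of result a correct deduction should reach. The rule and fact names are hypothetical.

```python
# Each rule is (premises, conclusion): if every premise holds, the conclusion holds.
Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Apply modus ponens repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


rules: list[Rule] = [
    (frozenset({"server_down"}), "requests_fail"),
    (frozenset({"requests_fail", "no_retry"}), "user_sees_error"),
    (frozenset({"user_sees_error"}), "ticket_filed"),
]

print(sorted(forward_chain({"server_down", "no_retry"}, rules)))
# prints: ['no_retry', 'requests_fail', 'server_down', 'ticket_filed', 'user_sees_error']
```

Removing the premise "no_retry" stops the chain after "requests_fail", which is exactly the kind of dependency a careful deductive answer should respect.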

Analytical Problem-Solving:

Grok can break down complex problems into component parts and work through them systematically:

- Step-by-Step Analysis:

- When faced with multi-stage problems, Grok can decompose them into sequential steps and address each in turn.

- The model maintains awareness of its progress through a problem-solving sequence and builds upon earlier steps.

- Example: When solving a complex mathematical problem, Grok will typically show its work by proceeding through logical stages of calculation.

- Case Analysis:

- Grok can consider different possible scenarios or cases and analyze each separately.

- It demonstrates the ability to explore multiple branches of possibility in conditional problems.

- Example: Given a problem with several possible initial conditions, Grok can analyze the outcome for each condition.

- Constraint Satisfaction:

- The model can reason about problems with multiple constraints and identify solutions that satisfy all given conditions.

- It demonstrates understanding of how constraints interact and limit the solution space.

- Example: Given a scheduling problem with multiple requirements, Grok can propose arrangements that meet all specified constraints.
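The scheduling example can be illustrated with a small, hypothetical constraint-satisfaction problem solved by exhaustive search. The meetings, time slots, and constraints are invented for illustration; brute force is only practical because the problem is tiny, and larger problems call for backtracking or a dedicated constraint solver.

```python
from itertools import permutations

# Hypothetical problem: place three meetings into three time slots.
SLOTS = ["9:00", "10:00", "11:00"]
MEETINGS = ["standup", "design_review", "client_call"]


def satisfies(schedule: dict[str, int]) -> bool:
    """`schedule` maps meeting -> slot index; every constraint must hold."""
    return (
        schedule["standup"] == 0                                  # standup comes first
        and schedule["client_call"] != 1                          # client busy at 10:00
        and schedule["design_review"] < schedule["client_call"]   # review before the call
    )


def solve() -> list[dict[str, str]]:
    """Try every one-meeting-per-slot assignment and keep the valid ones."""
    solutions = []
    for order in permutations(range(len(SLOTS))):
        schedule = dict(zip(MEETINGS, order))
        if satisfies(schedule):
            solutions.append({m: SLOTS[i] for m, i in schedule.items()})
    return solutions


print(solve())
# prints: [{'standup': '9:00', 'design_review': '10:00', 'client_call': '11:00'}]
```

The constraints interact: fixing the standup at 9:00 and ruling out 10:00 for the client call forces the call to 11:00, which in turn leaves 10:00 for the review.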

Numerical and Mathematical Reasoning:

Grok shows capability for mathematical thinking across various domains:

- Arithmetic and Basic Mathematics:

- The model can perform calculations, though its accuracy may decrease with very complex calculations.

- It demonstrates understanding of mathematical concepts like percentages, ratios, and basic statistics.

- Example: Grok can calculate compound interest, convert between units, or determine statistical measures for data sets.

- Mathematical Problem Formulation:

- Grok can translate word problems into mathematical expressions or equations.

- It shows ability to identify the appropriate mathematical approach for different problem types.

- Example: Given a verbal description of a physics scenario, Grok can set up the relevant equations.

- Probabilistic Reasoning:

- The model demonstrates understanding of probability concepts and can calculate probabilities for various scenarios.

- It shows awareness of concepts like independence, conditional probability, and expected value.

- Example: Grok can reason about the likelihood of different outcomes in games of chance or statistical scenarios.
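Calculations of the kinds listed above (compound interest, conditional probability, expected value) can be checked mechanically, which is useful for verifying a model's arithmetic. The sketch below uses invented inputs, the standard compound-interest formula A = P(1 + r/n)^(nt), and exact enumeration over two fair dice; it is a worked illustration, not a description of how Grok computes.

```python
from fractions import Fraction
from itertools import product


def compound_interest(principal: float, annual_rate: float,
                      periods_per_year: int, years: int) -> float:
    """Final balance A = P * (1 + r/n) ** (n * t)."""
    return principal * (1 + annual_rate / periods_per_year) ** (periods_per_year * years)


# Every outcome of rolling two fair six-sided dice, each equally likely.
OUTCOMES = list(product(range(1, 7), repeat=2))


def probability(event) -> Fraction:
    """Exact probability of `event` (a predicate on an outcome) by enumeration."""
    hits = sum(1 for outcome in OUTCOMES if event(outcome))
    return Fraction(hits, len(OUTCOMES))


def conditional(event, given) -> Fraction:
    """P(event | given) = P(event and given) / P(given)."""
    return probability(lambda o: event(o) and given(o)) / probability(given)


balance = compound_interest(1000, 0.05, 12, 10)   # $1,000 at 5%, compounded monthly, 10 years
p_any_six = probability(lambda o: 6 in o)         # at least one six in two rolls
p_sum8_given_first3 = conditional(lambda o: sum(o) == 8, lambda o: o[0] == 3)
expected_die = sum(Fraction(face, 6) for face in range(1, 7))  # expected value of one die

print(f"{balance:.2f}", p_any_six, p_sum8_given_first3, expected_die)
# prints: 1647.01 11/36 1/6 7/2
```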

Critical Thinking and Evaluation:

Grok demonstrates capabilities for evaluating information and arguments:

- Argument Analysis:

- The model can identify premises, conclusions, and the logical structure of arguments.

- It shows ability to recognize common logical fallacies and weaknesses in reasoning.

- Example: Given an argument, Grok can point out unwarranted assumptions or logical gaps.

- Evidence Evaluation:

- Grok attempts to assess the strength and relevance of evidence for claims.

- It demonstrates awareness of concepts like correlation vs. causation and statistical significance.

- Example: When presented with statistical claims, Grok can identify potential confounding factors or limitations.

- Source Credibility Assessment:

- When using its web browsing capability, Grok makes efforts to evaluate the reliability of sources.

- It shows awareness of factors that affect source credibility, though its implementation may be imperfect.

- Example: Grok might note when information comes from peer-reviewed research versus less authoritative sources.

Meta-Reasoning:

Grok demonstrates some capacity for reasoning about its own reasoning:

- Confidence Assessment:

- The model attempts to communicate its confidence in different parts of its reasoning.

- It shows willingness to acknowledge uncertainty when information is limited or conclusions are tentative.

- Example: Grok might indicate that certain conclusions are speculative or note when it lacks sufficient information for a definitive answer.

- Approach Selection:

- Grok can select appropriate reasoning strategies for different types of problems.

- It demonstrates flexibility in switching between reasoning approaches as needed.

- Example: The model might use different approaches for mathematical problems versus ethical dilemmas.

- Error Detection:

- In some cases, Grok can recognize and correct errors in its own reasoning.

- This self-correction ability is limited but represents an important aspect of its reasoning capability.

- Example: After working through a problem, Grok might recognize a mistake in its earlier steps and provide a correction.

While Grok's reasoning abilities are impressive, they do have limitations. The model may occasionally make errors in complex logical chains, struggle with highly abstract reasoning, or fail to recognize subtle logical fallacies. These limitations reflect the broader challenges in implementing human-like reasoning in current AI systems. Nevertheless, Grok's reasoning capabilities enable it to handle a wide range of intellectual tasks with considerable effectiveness.

Creative Generation

Grok AI demonstrates significant capabilities in creative content generation, allowing it to produce various forms of original, engaging, and contextually appropriate content based on user requests. This creative generation spans multiple domains and formats, making it valuable for diverse applications from entertainment to professional content creation.

Text-Based Creative Writing:

Grok can generate various forms of creative written content:

- Narrative Fiction:

- The model can create original short stories, fictional scenes, and narrative fragments across genres.

- It demonstrates understanding of story structure, character development, dialogue, and pacing.

- Example capabilities include writing short stories in specific genres, continuing stories from prompts, or creating character sketches.

- Poetry and Verse:

- Grok can generate various poetic forms, from free verse to structured formats like sonnets, haiku, or limericks.

- It shows a working command of rhyme, meter, imagery, and other poetic devices.

- The quality of poetic output varies, with simpler forms often being more successful than complex poetic structures.

- Dialogue and Script Writing:

- The model can create conversational exchanges between characters, including script-style formatting.

- It demonstrates ability to maintain consistent character voices and advance narratives through dialogue.

- Example capabilities include writing screenplay scenes, creating hypothetical conversations, or generating interview scripts.

- Creative Non-fiction:

- Grok can produce essay-style explorations of topics from creative perspectives.

- It shows ability to blend informational content with engaging narrative approaches.

- Example capabilities include generating travel narratives, reflective essays, or feature article-style content.

Professional Content Creation:

Beyond purely creative writing, Grok can generate various forms of professional content:

- Marketing and Promotional Copy:

- The model can create advertising copy, product descriptions, marketing emails, and similar content.

- It demonstrates understanding of persuasive techniques, audience targeting, and brand voice.

- Example capabilities include generating taglines, creating product launch announcements, or writing promotional blog posts.

- Educational Content:

- Grok can produce instructional materials, explanatory texts, and learning resources.

- It shows ability to adapt explanations for different knowledge levels and learning contexts.

- Example capabilities include creating lesson plans, educational scripts, or explanatory guides.

- Business Communications:

- The model can generate professional emails, reports, proposals, and other business documents.

- It demonstrates understanding of business communication norms and professional language.

- Example capabilities include drafting business correspondence, creating meeting agendas, or writing executive summaries.

- Technical Writing:

- Grok can produce documentation, technical guides, and process descriptions.

- It shows ability to present complex information clearly and systematically.

- Example capabilities include creating user guides, API documentation, or technical specifications.

Specialized Creative Generation:

Grok also demonstrates creative generation capabilities in more specialized domains:

- Code Generation:

- The model can create original code snippets, scripts, and programs across multiple programming languages.

- It demonstrates understanding of programming concepts, syntax, and best practices.

- Example capabilities include generating functions for specific tasks, creating data processing scripts, or building simple applications.

- Game and Interactive Content:

- Grok can create content for games and interactive experiences, such as game scenarios, puzzles, or interactive fiction.

- It shows understanding of game mechanics, player engagement, and interactive storytelling.

- Example capabilities include designing riddles, creating text-based adventure scenarios, or developing game concepts.

- Adaptation and Transformation:

- The model can transform content between styles, formats, or tones.

- It demonstrates ability to maintain core content while adapting presentational elements.

- Example capabilities include rewriting technical content for non-technical audiences, adapting prose to different periods or styles, or converting concepts between media formats.

Creative Process Capabilities:

Beyond generating content, Grok shows capabilities related to the creative process itself:

- Ideation and Brainstorming:

- The model can generate multiple creative ideas around a theme or concept.

- It demonstrates divergent thinking and the ability to explore different conceptual directions.

- Example capabilities include brainstorming product names, generating story concepts, or proposing creative solutions to problems.

- Iteration and Refinement:

- Grok can revise and refine creative content based on feedback or direction.

- It shows ability to maintain core elements while making specified improvements.

- Example capabilities include editing draft content, enhancing specific aspects of created work, or addressing stylistic issues.

- Style Adaptation:

- The model can generate content in specific creative styles, mimicking genres or famous creators.

- It demonstrates understanding of the distinctive elements that characterize different creative styles.

- Example capabilities include writing in the style of specific authors, creating content in particular historical periods, or mimicking genre conventions.

Limitations and Considerations:

While Grok's creative generation capabilities are impressive, they do have limitations:

- Originality Boundaries:

- Like all current AI systems, Grok's creative output ultimately derives from patterns in its training data.

- While it can combine elements in novel ways, its fundamental creative building blocks come from existing human-created content.

- Quality Variation:

- The quality of creative output can vary significantly depending on the complexity of the request and the specific creative domain.

- Simpler creative forms typically yield more consistently successful results than highly sophisticated creative formats.

- Cultural and Contextual Limitations:

- Grok's creative generation may reflect biases or limitations in its training data, particularly regarding cultural contexts beyond dominant Western traditions.

- The model may have uneven capabilities across different cultural references, historical periods, or specialized creative domains.

- Ethical Boundaries:

- Grok maintains ethical boundaries in its creative generation, refusing to create certain types of content deemed harmful.

- This includes limitations on generating graphically violent content, sexually explicit material, or content promoting harmful activities.

Despite these limitations, Grok's creative generation capabilities represent a powerful tool for content creation, ideation, and creative exploration across a wide range of applications and domains.

Unique Features

"Rebellious" Nature

One of Grok AI's most distinctive characteristics is what xAI describes as its "rebellious" nature—a deliberate design choice that sets the model apart from many competitors in the AI assistant space. This characteristic manifests in several important ways and reflects xAI's broader philosophy regarding AI development and interaction.

Philosophical Foundation:

The "rebellious" nature of Grok stems from Elon Musk's stated concerns about what he perceives as excessive caution and potential political bias in other AI systems. xAI positioned Grok explicitly as an alternative approach, designed to:

- Challenge perceived orthodoxy: Explore ideas or perspectives that other AI systems may be more hesitant to engage with.

- Prioritize "maximum truth-seeking": Emphasize providing information and perspectives even on controversial or sensitive topics, with less restriction than some competing models.

- Balance safety with openness: Maintain basic safety guardrails while allowing more conversational freedom than some alternatives.

- Embrace humor and personality: Present information with a more casual, sometimes witty conversational style rather than a strictly neutral tone.

Manifestations in Interaction:

Grok's "rebellious" nature manifests in several observable ways during interactions:

- Conversational Style:

- Grok often adopts a more casual, colloquial tone than many other AI assistants.

- The model may use humor, informal language, and conversational flourishes more frequently.

- This creates a more personable interaction experience that some users find more engaging.

- Topic Engagement:

- Grok is generally more willing to engage with controversial or sensitive topics, though still maintaining basic safety boundaries.

- The model attempts to provide balanced perspectives on divisive issues rather than declining to address them.

- This approach aims to provide more informative responses on complex social and political topics.

- Humor Integration:

- Grok may incorporate humor, wit, or playful responses where appropriate.

- The model sometimes includes jokes, puns, or lighthearted remarks in its responses.

- This humor element contributes to Grok's distinctive personality and interaction style.

- Direct Communication:

- Grok tends toward straightforward communication with less hedging or qualification than some alternatives.

- The model aims to provide clear answers rather than overly cautious responses.

- This directness is particularly noticeable on topics where other AI systems might be more reserved.

- Creative Expression:

- Grok may exhibit more creative freedom in generating content, stories, or hypothetical scenarios.

- The model appears designed to balance creative expression with appropriate boundaries.

- This enables more engaging responses to creative requests while still maintaining responsible limits.

Ethical Boundaries and Safety:

Despite its "rebellious" positioning, Grok still maintains important ethical boundaries:

- Core Safety Guardrails:

- Grok will not assist with clearly illegal activities, provide instructions for causing harm, or generate explicitly harmful content.

- The model maintains limits around generating dangerous instructions, explicitly hateful content, or graphic violent or sexual material.

- These fundamental safety measures remain in place regardless of the "rebellious" positioning.

- Balanced Approach to Controversial Topics:

- When addressing controversial issues, Grok attempts to present multiple perspectives rather than avoiding the topic.

- The model aims to acknowledge complexity and nuance rather than presenting simplified or one-sided views.

- This approach seeks to provide informative responses while avoiding harmful biases.

- Transparency About Limitations:

- Grok will generally acknowledge when a request pushes against its ethical boundaries rather than subtly redirecting.

- The model typically explains its limitations explicitly when declining certain requests.

- This transparency aligns with the "rebellious" ethos of direct communication.

Strategic Positioning:

The "rebellious" nature of Grok represents a deliberate strategic positioning in the AI assistant market:

- Differentiation Strategy:

- This characteristic helps differentiate Grok from competitors in an increasingly crowded AI assistant landscape.

- It appeals to users who may find other AI systems overly cautious or restrictive.

- This positioning aligns with Elon Musk's public brand and appeals to his existing audience.

- Response to Perceived Market Gap:

- xAI identified what it saw as an underserved segment of users seeking more direct engagement with complex topics.

- Grok's approach attempts to fill this perceived gap while maintaining responsible AI practices.

- This positions the model as an alternative rather than just another entry in the AI assistant category.

- Alignment with Brand Identity:

- The "rebellious" nature connects with the broader brand identity of xAI and its association with Elon Musk.

- This consistency creates a coherent brand story around challenging conventions and pursuing unconventional approaches.

- The positioning reinforces Musk's public stance on free speech and anti-censorship principles.

While the "rebellious" characterization sometimes creates expectations of dramatic differences from other AI systems, in practice, the distinctions are often more subtle than revolutionary. Grok maintains many of the same fundamental ethical boundaries as other responsible AI systems while adopting a somewhat different approach to tone, engagement with controversial topics, and conversational style. This characteristic represents an important differentiator in Grok's identity while functioning within the broader context of responsible AI development.

Internet Access

Grok AI's internet access capability represents one of its most significant technical differentiators, allowing it to overcome the knowledge cutoff limitation that affects many large language models. This feature enables Grok to provide more current, comprehensive, and accurate information by directly accessing and processing web content in real time.

Technical Implementation:

Grok's internet access capability appears to be built from the following components:

- Integrated Web Browsing:

- Unlike some models that require plugins or extensions, Grok has web browsing capabilities built directly into its core functionality.

- This integration creates a more seamless experience when accessing current information.

- The system includes mechanisms for determining when web access is appropriate and beneficial for answering a query.

- Dynamic Query Generation:

- Grok analyzes user questions to formulate effective search queries.

- The model can generate multiple search queries for complex questions or refine queries based on initial results.

- This dynamic approach helps ensure relevant information is located even for nuanced or multi-faceted questions.

- Content Extraction and Processing:

- Beyond simple search results, Grok can access full web pages to extract detailed information.

- The model processes page content to identify relevant sections, data points, and context.

- This extraction capability allows for more comprehensive answers than would be possible from search snippets alone.

- Information Synthesis:

- Grok integrates information from multiple web sources with its existing knowledge.

- The model attempts to resolve contradictions or inconsistencies across sources.

- This synthesis process aims to provide coherent, accurate responses drawing on both real-time and pre-trained information.
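How Grok resolves contradictions between sources is not described here. One simple heuristic, shown purely as an illustration, is credibility-weighted voting: each source supports an answer, and the answer with the most total credibility wins. The SourceClaim class, the reconcile function, the credibility scores, and the example URLs are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SourceClaim:
    url: str
    value: str          # the answer this source gives, e.g. an announcement date
    credibility: float  # hypothetical reliability score in [0, 1]


def reconcile(claims: list[SourceClaim]) -> tuple[str, float, list[str]]:
    """Choose the value backed by the most total credibility.

    Returns (value, its share of all credibility, supporting URLs) so a response
    can signal how contested the answer is and cite the sources behind it.
    """
    weight: dict[str, float] = defaultdict(float)
    support: dict[str, list[str]] = defaultdict(list)
    for claim in claims:
        weight[claim.value] += claim.credibility
        support[claim.value].append(claim.url)
    best = max(weight, key=weight.get)
    share = weight[best] / sum(weight.values())
    return best, share, support[best]


claims = [
    SourceClaim("https://example.org/official", "March 4", 0.75),
    SourceClaim("https://example.com/blog", "March 5", 0.25),
    SourceClaim("https://example.net/news", "March 4", 0.5),
]
value, share, urls = reconcile(claims)
print(value, round(share, 2), urls)
# prints: March 4 0.83 ['https://example.org/official', 'https://example.net/news']
```

The returned share is the useful design choice here: a value near 0.5 signals genuine disagreement, in which case presenting both answers is more honest than silently picking one.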

Functional Workflow:

When a user asks a question that might benefit from current information, Grok follows a workflow along these lines:

- Query Analysis:

- Grok evaluates whether the question requires current information, references recent events, or concerns rapidly changing topics.

- Based on this analysis, the model decides whether to rely on its internal knowledge or activate its web browsing capability.

- Web Search Execution:

- If web access is needed, Grok formulates and executes search queries.

- The model retrieves search results that appear relevant to the user's question.

- These initial results provide an overview of available information sources.

- Source Selection and Access:

- Grok evaluates search results to identify the most promising sources.

- The model can then access full web pages from these sources to gather more detailed information.

- This selection process considers factors like source credibility, relevance, and information completeness.

- Information Extraction:

- From accessed web pages, Grok extracts the specific information relevant to the user's query.

- The model navigates page content to locate key facts, explanations, or data points.

- This extraction process filters out extraneous content to focus on pertinent information.

- Response Formulation:

- Grok synthesizes the extracted information into a coherent response.

- The model integrates this web-sourced content with its existing knowledge.

- The final response often includes attribution to sources when providing specific facts or claims.
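The source selection and attribution steps of this workflow can be sketched as follows, under stated assumptions: each search result carries a hypothetical credibility prior, relevance is measured as simple word overlap with the query terms, and citations are numbered in order of first use. SearchResult, relevance, select_sources, and format_answer are illustrative names, not part of any Grok or xAI API.

```python
import re
from dataclasses import dataclass


@dataclass
class SearchResult:
    title: str
    url: str
    snippet: str
    credibility: float  # hypothetical prior in [0, 1], e.g. from a curated domain list


def relevance(result: SearchResult, query_terms: set[str]) -> float:
    """Fraction of query terms that appear as words in the title or snippet."""
    words = set(re.findall(r"[a-z0-9]+", f"{result.title} {result.snippet}".lower()))
    return len(query_terms & words) / max(len(query_terms), 1)


def select_sources(results: list[SearchResult], query_terms: set[str],
                   k: int = 2, min_relevance: float = 0.5) -> list[SearchResult]:
    """Drop off-topic results, then rank the rest by relevance x credibility."""
    scored = []
    for result in results:
        rel = relevance(result, query_terms)
        if rel >= min_relevance:
            scored.append((rel * result.credibility, result))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [result for _, result in scored[:k]]


def format_answer(sentences: list[tuple[str, SearchResult]]) -> str:
    """Append numbered citations, reusing a number when a source is cited again."""
    numbers: dict[str, int] = {}
    body = []
    for sentence, source in sentences:
        n = numbers.setdefault(source.url, len(numbers) + 1)
        body.append(f"{sentence} [{n}].")
    refs = [f"[{n}] {url}" for url, n in numbers.items()]
    return " ".join(body) + "\n\nSources:\n" + "\n".join(refs)


results = [
    SearchResult("Grok release notes", "https://example.org/notes",
                 "xAI released a new Grok model", 0.9),
    SearchResult("Celebrity gossip", "https://example.com/gossip", "Unrelated story", 0.3),
    SearchResult("Tech news: Grok update", "https://example.net/tech",
                 "Grok model update announced by xAI", 0.6),
]
top = select_sources(results, {"grok", "xai", "model"})
answer = format_answer([
    ("xAI released a new Grok model", top[0]),
    ("Technology outlets covered the update", top[1]),
    ("The release notes list the changes", top[0]),
])
print(answer)
```

Tying each sentence to the source it came from, rather than appending a bibliography at the end, is what makes per-claim attribution possible when several sources are synthesized.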

Strategic Advantages:

Grok's internet access provides several strategic advantages over models limited to their training data:

- Information Currency:

- Grok can provide information about events that occurred after its training data cutoff.

- This allows the model to discuss recent developments, current events, and emerging trends.

- Users receive up-to-date answers rather than information that might be months or years out of date.

- Factual Accuracy:

- Access to current sources improves the accuracy of responses about rapidly changing topics.

- The model can verify or update information that may have changed since its training.

- This reduces the risk of providing outdated or superseded information.

- Information Breadth:

- Web access effectively expands Grok's knowledge domain beyond what was covered in its training data.

- The model can provide information on niche or specialized topics by accessing relevant websites.

- This breadth makes Grok more versatile across diverse subject areas.

- Source Transparency:

- Grok can provide attribution for information drawn from external sources.

- This allows users to assess the credibility of information or explore topics further.

- The transparency supports more informed decision-making based on Grok's responses.

Implementation Challenges:

Despite its advantages, Grok's internet access feature entails several implementation challenges:

- Source Reliability Assessment:

- The quality of responses depends partly on Grok's ability to assess source credibility.

- This assessment is complex and imperfect, potentially leading to inclusion of information from less reliable sources.

- The model must balance accessing current information with ensuring source quality.

- Information Synthesis Complexity:

- Integrating information from multiple sources, potentially with contradictions, is technically challenging.

- The model must resolve inconsistencies and present a coherent synthesis.

- This process becomes particularly complex for controversial or rapidly evolving topics.

- Processing Efficiency:

- Web access introduces additional processing time compared to responses from internal knowledge.

- The model must balance comprehensive information gathering with reasonable response times.

- This balance affects the user experience, particularly for complex queries requiring multiple searches.

- Access Limitations:

- Grok cannot access content behind paywalls, logins, or other access controls.

- Some valuable information sources remain inaccessible to the model's web browsing capability.

- This creates uneven coverage across different information domains.

- Privacy and Data Considerations:

- Web browsing raises questions about how user queries might be exposed to search engines.

- The implementation must balance effective information retrieval with appropriate privacy protections.

- This requires careful system design to manage data flows and search execution.
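The first two challenges, judging source reliability and reconciling contradictory sources, can be illustrated with reliability-weighted voting. This is a generic technique swapped in for illustration, not a description of how Grok works. It assumes each source already carries a credibility score between 0 and 1, and the `Claim` and `reconcile` names and the `min_support` threshold are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Claim:
    source: str          # hypothetical source identifier
    value: str           # the answer this source gives
    reliability: float   # assumed credibility score in [0, 1]


def reconcile(claims: list[Claim], min_support: float = 0.6) -> tuple[str, float, bool]:
    """Pick the value with the most reliability-weighted support.

    Returns (best_value, support, contested), where support is the best value's
    share of the total weight and contested is True when that share is below
    min_support, signalling that the answer should mention the disagreement.
    """
    if not claims:
        raise ValueError("no claims to reconcile")
    totals: dict[str, float] = defaultdict(float)
    for c in claims:
        if not 0.0 <= c.reliability <= 1.0:
            raise ValueError(f"reliability out of range for {c.source!r}")
        totals[c.value] += c.reliability
    grand_total = sum(totals.values())
    best = max(totals, key=totals.get)
    support = totals[best] / grand_total if grand_total else 0.0
    return best, support, support < min_support


agreed = reconcile([
    Claim("wire-service", "42", 0.9),
    Claim("personal-blog", "40", 0.3),
    Claim("official-site", "42", 0.8),
])
split = reconcile([Claim("site-a", "X", 0.5), Claim("site-b", "Y", 0.5)])
print(agreed)
print(split)
```

The first call favours "42" with about 85% of the total weight. The second is an even split, so it is flagged as contested, the case in which an assistant would ideally present both values rather than silently picking one. The outcome is only as good as the reliability scores fed into it, which is the imperfect assessment described above.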

Grok's internet access capability represents a significant advance in AI assistant functionality: it addresses the knowledge-cutoff limitation of traditional LLMs and makes it possible to provide current, comprehensive information. The feature exemplifies xAI's approach of building AI systems that retrieve and process real-time information rather than relying solely on their training data.

UI/UX Design

Grok AI's user interface and user experience (UI/UX) design represents an important aspect of its implementation, particularly as it appears on the X platform (formerly Twitter). The design choices reflect xAI's philosophy for AI interaction while addressing practical considerations for effective human-AI collaboration.

Interface Integration with X Platform:

Grok is most prominently featured as an integrated service within the X platform:

- Native Integration:

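- Grok first launched as a built-in feature for X Premium+ subscribers rather than as a separate application, though access has since been extended to other X users and standalone Grok apps have followed.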

- This integration creates a seamless experience within the X ecosystem.

- Users can access Grok directly from the X interface without switching contexts or applications.

- Dedicated Tab/Section:

- Within the X interface, Grok has a dedicated access point, typically represented by an icon in the navigation.

- This dedicated space creates a clear separation between standard X content and Grok interactions.

- The design maintains consistency with X's overall aesthetic while establishing Grok as a distinct service.

- Branded Identity:

- Grok maintains a consistent visual identity that aligns with both xAI's branding and the X platform's design language.

- This branding helps establish Grok as a distinctive product while fitting into the broader ecosystem.

- Visual elements like icons, color schemes, and typography create recognition and consistency.

Conversation Interface Design:

The core of Grok's user experience is its conversation interface:

- Chat-Based Interaction:

- Grok uses a familiar chat interface with user messages and AI responses in a conversational format.

- This design leverages users' existing familiarity with messaging interfaces.

- The conversation history remains visible, allowing users to reference earlier exchanges.

- Message Styling:

- User messages and Grok's responses have distinct visual treatments to clearly differentiate them.

- Grok's responses may include formatting elements like bold text, lists, or code blocks to improve readability.

- This styling enhances the clarity of complex or structured information.

- Input Mechanism:

- Users interact with Grok primarily through a text input field, similar to standard messaging interfaces.

- The input area may include prompts or placeholder text to guide users on how to interact with Grok.

- This familiar design reduces the learning curve for new users.

- Response Presentation:

- Grok's responses appear in a conversational format but may include rich formatting when appropriate.

- For complex information, the interface likely supports structured elements like tables, code blocks, or emphasized text.

- These formatting capabilities allow for more effective communication of diverse content types.

Interaction Model:

Grok's interaction design emphasizes several key principles:

- Conversational Continuity:

- The interface maintains conversation history and context across multiple exchanges.

- This continuity allows for natural follow-up questions and extended discussions.

- The design supports reference to earlier parts of the conversation for clarity and context.

- Response Timing Indicators:

- When Grok is processing complex queries or accessing web information, the interface likely provides visual indicators of this process.

- These indicators help set user expectations about response timing.

- This transparency improves the experience when responses require additional processing time.

- Feedback Mechanisms:

- The interface probably includes mechanisms for users to provide feedback on Grok's responses.

- This feedback might include options to rate responses or report problematic content.

- These mechanisms support both user satisfaction and continuous improvement of the system.

- Recovery Paths:

- The design likely includes approaches for recovering from misunderstandings or unclear queries.

- These might include suggested reformulations, clarifying questions, or options to restart a conversation.

- These recovery paths help prevent frustration when communication challenges arise.

Distinctive UX Elements:

Several UX elements likely differentiate Grok's implementation:

- Personality Expression:

- The interface design probably supports Grok's "rebellious" personality through tone, language, and possibly visual elements.

- This personality-driven design creates a more engaging and distinctive experience.

- Expressions of personality are balanced against professionalism and helpfulness.

- Web Browsing Transparency:

- When Grok accesses the internet for information, the interface likely provides some indication of this process.

- This transparency helps users understand when responses are based on real-time information versus Grok's internal knowledge.

- The design might include source citations or references when presenting web-sourced information.

- Multimodal Support:

- With the introduction of Grok-1.5V, the interface has likely been enhanced to support image inputs alongside text.

- This multimodal capability allows users to share images for Grok to analyze or comment on.

- The design likely accommodates both text-only and image+text interactions within the same conversation flow.

- Progressive Disclosure:

- For complex or lengthy responses, the interface might implement progressive disclosure techniques.

- These could include expandable sections, "read more" options, or collapsible elements.

- This approach helps manage information density and cognitive load, particularly on mobile devices.

Platform Adaptations:

Grok's interface likely adapts to different contexts within the X ecosystem:

- Mobile Optimization:

- Given X's significant mobile usage, Grok's interface is likely optimized for smaller screens.

- This includes appropriate text sizing, touch targets, and conversation layouts for mobile devices.

- The mobile experience likely maintains the core functionality while adapting to screen constraints.

- Desktop Enhancements:

- On larger screens, the interface probably takes advantage of additional space for enhanced formatting.

- This might include side-by-side layouts, more extensive formatting options, or additional controls.

- These enhancements improve the experience while maintaining consistency across platforms.

- Accessibility Considerations:

- The interface likely implements accessibility features consistent with X's overall approach.

- These features would include screen reader compatibility, keyboard navigation, and appropriate contrast ratios.

- These considerations are intended to make Grok usable by people with diverse abilities and needs.

While specific details of Grok's UI/UX design may evolve over time, these elements represent the likely approach based on xAI's stated philosophy and the integration within the X platform. The design balances conversational naturalness with effective information presentation, creating a distinctive experience that aligns with Grok's overall positioning in the AI assistant market.

Contextual Understanding

Grok AI demonstrates sophisticated contextual understanding capabilities that allow it to maintain coherent, relevant conversations across multiple exchanges. This ability to track and utilize context is fundamental to Grok's effectiveness as a conversational AI assistant.

Conversation Context Maintenance:

Grok maintains awareness of conversation history in several important ways:

- Reference Resolution:

- The model can resolve pronouns and other references to previously mentioned entities or concepts.

- For example, if a user asks about "its climate" after discussing a specific country, Grok can infer that "its" refers to that country.

- This capability extends to complex reference patterns across multiple turns of conversation.

- Topic Tracking:

- Grok maintains awareness of the current conversation topic or topics, allowing for coherent discussion flow.

- The model recognizes when subtopics emerge and can navigate between related subjects while maintaining overall coherence.

- This tracking allows Grok to provide responses that build upon previously established information rather than treating each query in isolation.

- Information Persistence:

- Details shared earlier in a conversation remain available for later reference and use, within the limits of the model's context window.

- This includes facts, preferences, specifications, or constraints mentioned by the user.

- This persistence allows for progressive refinement of topics or ideas across multiple exchanges.

- Conversational Arc Awareness:

- Grok demonstrates awareness of the overall progression of a conversation, including its phase and purpose.

- The model can adapt its responses based on whether a conversation is in an exploratory, problem-solving, or concluding phase.

- This awareness helps Grok provide appropriately structured responses at different stages of an interaction.
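Chat-based LLMs typically maintain this kind of context by resending the conversation history with every request, so what the model can "remember" is bounded by its context window. The following is a minimal sketch of one common policy, a sliding window that keeps the newest turns. xAI has not documented how Grok manages context; word counts are a crude stand-in for tokens, and `Conversation`, `max_words`, and `build` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    system_prompt: str
    max_words: int = 50  # crude stand-in for a model's token budget
    turns: list[tuple[str, str]] = field(default_factory=list)  # (role, text) pairs

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def context(self) -> list[tuple[str, str]]:
        """Return the system prompt plus the newest turns that fit the budget.

        Turns are scanned from newest to oldest; the first turn that does not fit
        ends the scan, so everything older than it is dropped.
        """
        budget = self.max_words - len(self.system_prompt.split())
        kept: list[tuple[str, str]] = []
        for role, text in reversed(self.turns):
            cost = len(text.split())
            if cost > budget:
                break
            kept.append((role, text))
            budget -= cost
        return [("system", self.system_prompt)] + kept[::-1]


def build(max_words: int) -> Conversation:
    conv = Conversation("You are a helpful assistant.", max_words=max_words)
    conv.add("user", "Tell me about Norway.")
    conv.add("assistant", "Norway is a Nordic country in northern Europe with a long coastline.")
    conv.add("user", "What is its climate like?")
    return conv


roomy = build(max_words=30).context()  # all turns fit, so the Norway turn stays in context
tight = build(max_words=20).context()  # older turns are dropped; "Norway" is no longer visible
for role, text in tight:
    print(f"{role}: {text}")
```

With the larger budget every turn fits, so the turn naming Norway is still available when the user asks about "its climate". With the smaller budget the scan stops at the long assistant reply, leaving only the system prompt and the latest question, and the reference can no longer be resolved from the history alone.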

Multi-turn Reasoning: