California's Pioneering AI Legislation: Shaping the Future of Artificial Intelligence

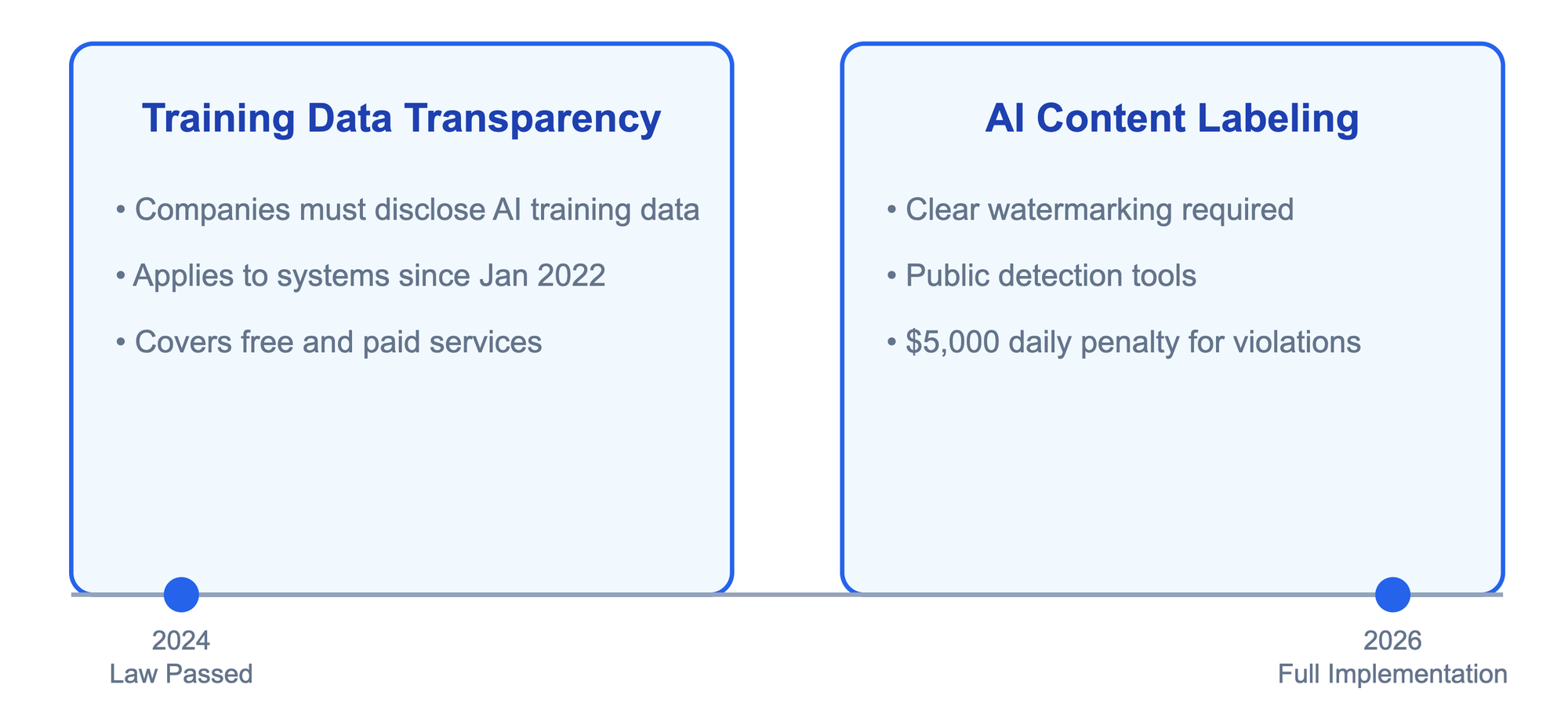

California has passed revolutionary legislation to regulate artificial intelligence, requiring companies to disclose training data and label AI-generated content. Starting in 2026, these laws will transform how AI companies operate and how consumers interact with AI-generated materials.

California has just passed groundbreaking laws to make artificial intelligence (AI) more transparent and accountable. Starting January 1, 2026, companies developing AI systems will need to follow new rules designed to protect consumers and ensure responsible AI development.

The new legislation, signed by Governor Gavin Newsom, consists of two main laws, Assembly Bill 2013 (AB 2013) and Senate Bill 942 (SB 942), that will change how AI companies operate in California.

Assembly Bill 2013 (AB 2013): The Generative AI Training Data Transparency Act

AB 2013 requires AI companies to be open about the data they use to train their AI systems. This means companies must explain what information they used to teach their AI to generate text, images, videos, or audio.

Think of it like reading the ingredients list on food packaging – just as consumers want to know what goes into their food, they'll now be able to know what data goes into the AI systems they use. This transparency requirement applies to both free and paid AI services, including those released or significantly changed since January 1, 2022.
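To make the "ingredients list" idea concrete, here is a minimal Python sketch of what a machine-readable training data disclosure might look like. Every class and field name below is a hypothetical choice for illustration; the law does not prescribe this format, and the fields shown are not a complete list of what a company would need to publish.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class DatasetSummary:
    # Hypothetical fields, chosen only to illustrate the idea.
    name: str
    source: str
    contains_personal_information: bool
    contains_copyrighted_material: bool


@dataclass
class TrainingDataDisclosure:
    # Hypothetical record a company might publish for one AI system.
    system_name: str
    release_date: str  # ISO 8601 date string, e.g. "2024-03-01"
    datasets: list[DatasetSummary] = field(default_factory=list)

    def to_json(self) -> str:
        # Serialize the whole disclosure, including nested datasets.
        return json.dumps(asdict(self), indent=2)


disclosure = TrainingDataDisclosure(
    system_name="ExampleGen",  # made-up system name
    release_date="2024-03-01",
    datasets=[
        DatasetSummary(
            name="public-web-text",
            source="Publicly available web pages",
            contains_personal_information=True,
            contains_copyrighted_material=True,
        )
    ],
)
print(disclosure.to_json())
```

A structured record like this could be posted on a company's website next to a plain-language summary, so that both people and software can read it.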

Senate Bill 942 (SB 942): The California AI Transparency Act

SB 942 focuses on making AI-generated content easily identifiable. Companies must add clear labels or "watermarks" to content created by AI, helping people distinguish between human-created and AI-generated material. It's similar to how products carry labels indicating they're "Made in USA" or "Organic" – now, content will carry markers showing it was "Made by AI."

To help people verify AI-created content, the law requires covered AI providers to offer public tools that can detect AI-generated materials. These tools will be freely available to anyone who wants to check whether something they're looking at was created by AI.
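The label-then-verify idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any provider actually implements it: the function names, the `SECRET_KEY`, and the record layout are all hypothetical, and real watermarks are typically embedded in the media itself and designed to survive editing, which this metadata-only approach does not attempt.

```python
import hashlib
import hmac

# Hypothetical signing key held by the AI provider (illustration only).
SECRET_KEY = b"demo-key-not-for-production"


def _tag(text: str) -> str:
    # Keyed hash that ties a provenance tag to the exact content.
    return hmac.new(SECRET_KEY, text.encode("utf-8"), hashlib.sha256).hexdigest()


def label_content(text: str, provider: str) -> dict:
    """Attach a visible label and a hidden provenance tag to AI output."""
    return {
        "content": f"{text}\n\n[Made by AI: {provider}]",  # visible label
        "provider": provider,
        "provenance_tag": _tag(text),  # hidden, machine-checkable marker
    }


def verify_label(text: str, record: dict) -> bool:
    """Check whether the text matches the provenance tag in the record."""
    return hmac.compare_digest(_tag(text), record.get("provenance_tag", ""))


record = label_content("A sunset over the Pacific.", "ExampleAI")
print(record["content"])
print(verify_label("A sunset over the Pacific.", record))  # matches the tag
print(verify_label("A sunrise over the Pacific.", record))  # altered text
```

The visible label serves readers directly, while the hidden tag gives a detection tool something to check even if the visible label is cropped or deleted.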

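The laws come with serious enforcement measures. Under SB 942, companies that don't comply could face civil penalties of $5,000 per violation, with each day of noncompliance counted as a separate violation. The California Attorney General and local authorities, such as city attorneys and county counsels, will have the power to enforce these rules.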
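To see how quickly a daily penalty adds up, here is a small worked example in Python. It assumes the simplest reading of the figure above, a flat $5,000 for each day of noncompliance; the function name is hypothetical, and actual penalties would depend on how violations are counted in a given case.

```python
DAILY_PENALTY_USD = 5_000  # per-day figure cited above


def max_penalty(days_noncompliant: int) -> int:
    """Upper-bound exposure, assuming one penalty per day of noncompliance."""
    if days_noncompliant < 0:
        raise ValueError("days_noncompliant must be non-negative")
    return DAILY_PENALTY_USD * days_noncompliant


for days in (1, 30, 365):
    print(f"{days} days: ${max_penalty(days):,}")
# 1 days: $5,000
# 30 days: $150,000
# 365 days: $1,825,000
```

Under that assumption, a month of noncompliance could cost up to $150,000, and a full year more than $1.8 million.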

For everyday Californians, these laws mean greater protection against misinformation and more control over their digital experiences. When scrolling through social media or browsing websites, people will be able to easily tell whether they're looking at content created by humans or AI.

While these rules might make it harder for smaller AI companies to compete, supporters argue that building trust in AI technology is crucial for its long-term success. The laws aim to foster innovation while ensuring AI development remains responsible and transparent.

California's approach could influence how other states and countries regulate AI. As home to many leading technology companies, California's standards often become informal national benchmarks. These laws might serve as a model for future AI regulations across the United States and beyond.

Companies have until January 1, 2026, to prepare for these changes, giving them time to adjust their practices and implement the required transparency measures. This timeline acknowledges that significant changes will be needed in how AI companies operate, while ensuring the protection of consumer interests isn't delayed indefinitely.

As artificial intelligence becomes more integrated into our daily lives, these laws represent an important step toward ensuring that AI enhances rather than undermines public trust. They set a clear direction for the future: one where powerful AI technology develops alongside strong protections for public interests.