A Comparative Analysis of Anthropic's Model Context Protocol and Google's Agent-to-Agent Protocol

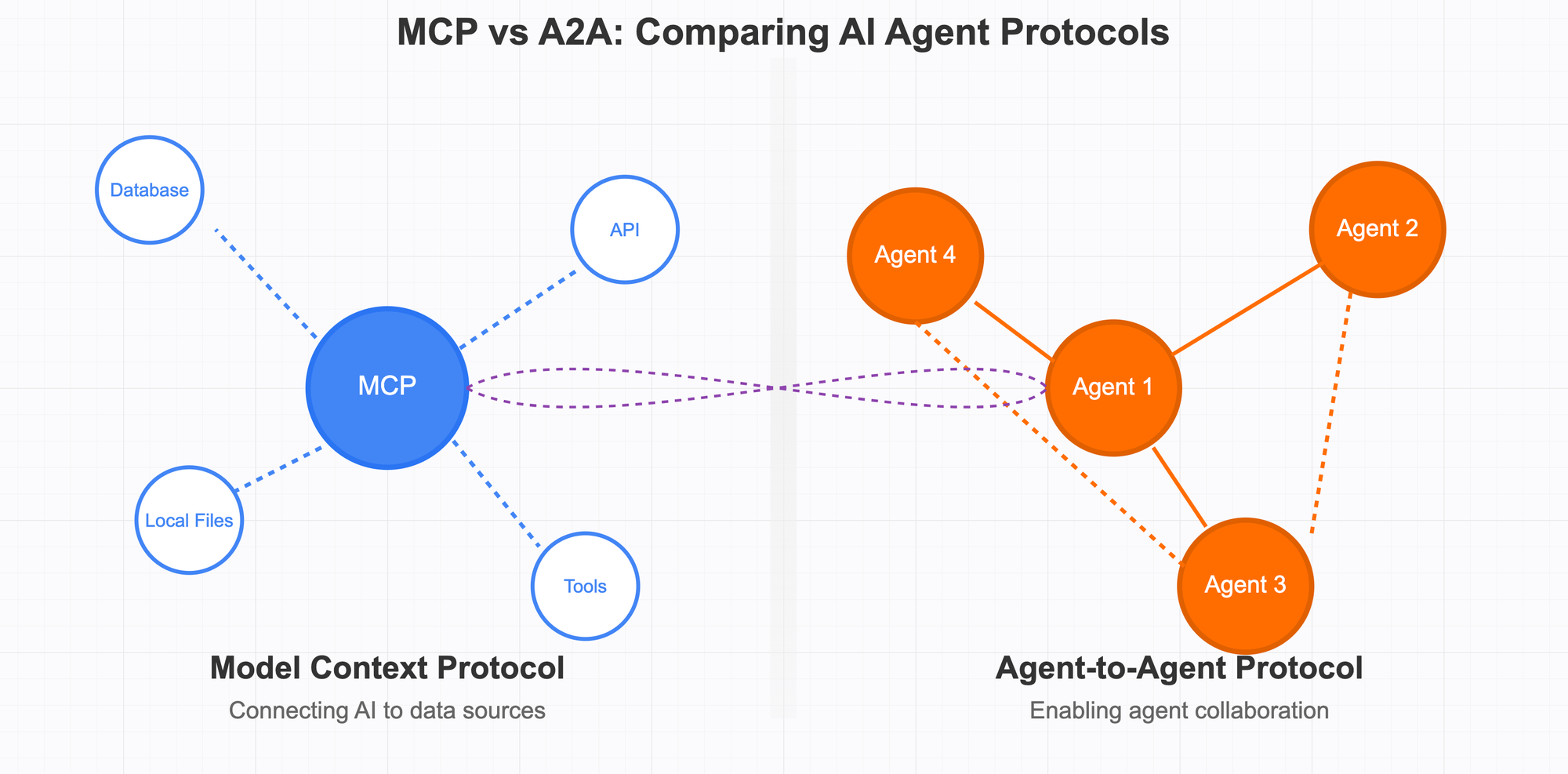

As AI agents transform enterprise technology, two critical protocols are emerging as industry standards: Anthropic's MCP for connecting AI to data sources and Google's A2A for agent collaboration. This analysis breaks down how these frameworks will define the future of integrated AI systems.

1. Introduction

The rapid evolution and increasing prevalence of AI agents are driving a need for standardized protocols that facilitate their seamless integration and interoperability. In this dynamic landscape, two prominent protocols have emerged: Anthropic's Model Context Protocol (MCP) and Google's Agent-to-Agent Protocol (A2A). Each protocol addresses distinct, yet potentially overlapping, facets of the burgeoning AI agent ecosystem.

This report analyzes both MCP and A2A, examining their purposes, functionality, technical specifications, intended use cases, benefits, and limitations. It then compares the two protocols in detail to identify their individual strengths and weaknesses and their potential for synergy in shaping AI agent development and deployment.

2. Anthropic's Model Context Protocol (MCP)

2.1. Purpose and Core Concepts

Anthropic's Model Context Protocol (MCP), introduced in late November 2024, is an open standard designed to tackle the challenge of fragmented integrations that have historically plagued the connection between AI models and external data repositories, business tools, and development environments. The primary aim of MCP is to enable frontier AI models to generate more relevant and higher-quality responses by providing them with a standardized and efficient way to access the data they require. It functions as a universal and open conduit for linking AI systems with diverse data sources, often likened to a "USB port" for AI applications, offering a consistent interface that eliminates the need for custom coding for each new data source or service. The fundamental objective of MCP is to replace the current landscape of disparate, ad-hoc integrations with a unified protocol, thereby simplifying the development process and significantly enhancing the scalability of AI-powered systems that rely on external information.

The introduction of MCP underscores a critical impediment in the advancement of artificial intelligence: the inherent difficulty in establishing effective connections between highly capable AI models and the vast amounts of real-world data necessary for their optimal performance. Even the most sophisticated models are often confined by their isolation from relevant data, residing behind information silos and within legacy systems. The prevalent approach of developing custom integrations for each new data source has proven to be a significant obstacle to scaling truly connected AI systems. MCP directly confronts this challenge by offering a common and open language that standardizes how AI systems access and interact with data, representing a significant step towards making AI more contextually aware and practically applicable across various domains.

2.2. Functionality and Architecture

MCP operates on a client-server architectural model. This architecture comprises three primary components: the MCP Host, the MCP Client, and the MCP Server. The MCP Host is the AI-powered application or agent environment, such as the Claude Desktop application or an integrated development environment (IDE) plugin, that serves as the primary interface for user interaction. Notably, an MCP Host can establish connections with multiple MCP Servers concurrently.

The MCP Client acts as an intermediary component residing within the host application. Its role is to manage the connection to a single, specific MCP Server, thereby ensuring a degree of isolation and enhancing security. For each MCP Server it needs to interact with, the host application spawns a dedicated MCP Client, maintaining a one-to-one relationship between the client and the server.

The MCP Server is typically an external program that implements the MCP standard. Its primary function is to provide access to a specific set of capabilities, which often include a collection of tools, access to various data resources, and predefined prompts tailored to a particular domain. An MCP Server might interface with a diverse range of data sources, such as a database, a cloud service, or virtually any other system containing relevant information.

This deliberate separation of responsibilities within the MCP architecture fosters a high degree of modularity and facilitates scalability in AI application development. By isolating the logic for data access within dedicated servers, developers of AI applications can concentrate their efforts on refining the user interface and enhancing the core AI functionalities. Similarly, providers of data can focus on securely exposing their information through MCP Servers without requiring an in-depth understanding of every specific AI model that might potentially connect to their systems. This division of labor streamlines the development lifecycle and encourages a more specialized approach to building connected AI solutions.
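The host, client, and server roles described above can be illustrated with a small in-process sketch. It is not the MCP SDK: the class names `HostStub`, `McpClientStub`, and `McpServerStub` and the tool names are hypothetical, and real clients talk to servers over a transport rather than holding a direct reference. The sketch only shows the one-client-per-server relationship.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class McpServerStub:
    """Stand-in for an external MCP server (hypothetical, in-process)."""
    name: str
    tools: List[str]


@dataclass
class McpClientStub:
    """Manages the connection to exactly one server."""
    server: McpServerStub

    def list_tools(self) -> List[str]:
        return list(self.server.tools)


@dataclass
class HostStub:
    """The AI application; it owns one client per connected server."""
    clients: Dict[str, McpClientStub] = field(default_factory=dict)

    def connect(self, server: McpServerStub) -> None:
        # A dedicated client per server keeps each connection isolated.
        self.clients[server.name] = McpClientStub(server)

    def all_tools(self) -> Dict[str, List[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}


host = HostStub()
host.connect(McpServerStub("postgres", ["run_query"]))
host.connect(McpServerStub("slack", ["post_message"]))
print(host.all_tools())
```

Because each client wraps a single server, dropping or replacing one integration does not touch the others, which is the modularity the architecture is aiming for.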

2.3. Technical Specifications

The communication backbone of MCP relies on JSON-RPC 2.0 messages, a lightweight and widely adopted remote procedure call protocol that facilitates structured data exchange between the client and the server. MCP defines a set of fundamental message types, known as "primitives," that govern the interactions between the client and the server. These primitives are categorized as either server-side or client-side.

On the server side, three primary primitives are defined:

- Resources: These represent structured data that the server can provide to the client, which in turn enriches the context available to the AI model. Examples include document snippets, fragments of code, or any other form of information that can be included in the model's prompt.

- Tools: These are executable functions or actions that the AI model can instruct the server to invoke. Examples might include executing a query against a database, performing a search on the web, or posting a message to a communication platform.

- Prompts: These are pre-prepared instructions or templates that the server can offer to guide the AI model in performing specific tasks. They are akin to stored prompts or macros that can be used to direct the model's behavior.

On the client side, two key primitives are defined:

- Roots: These represent entry points into the host application's file system or environment that the server might be granted access to, subject to user permissions. For instance, this could allow a server to access specific local files if explicitly authorized by the user.

- Sampling: This is a more advanced primitive that enables the server to request the host AI model to generate a completion based on a provided prompt. This feature allows for more complex, multi-step reasoning processes, where a server-side agent could potentially call back to the model for sub-tasks. Anthropic emphasizes that any use of the Sampling primitive should always require explicit human approval to prevent unintended or runaway self-prompting.
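To make the message layer concrete, the following sketch builds JSON-RPC 2.0 requests and a response of the kind a client and server might exchange for the Tools primitive. The method names `tools/list` and `tools/call` follow the published MCP specification as best understood here; the tool name `run_query`, its arguments, and the helper functions are hypothetical and exist only for illustration.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_result(request: dict, result: dict) -> dict:
    """Build the success response that answers a given request."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Ask the server which tools it offers, then invoke one of them.
list_req = make_request("tools/list")
call_req = make_request(
    "tools/call",
    {"name": "run_query", "arguments": {"sql": "SELECT 1"}},  # hypothetical tool
)
# Assumed result shape: a list of content items returned to the model.
call_res = make_result(call_req, {"content": [{"type": "text", "text": "1"}]})

print(json.dumps(call_req, indent=2))
```

The shared `id` field is what lets a client keep several requests in flight on one connection and still pair each response with its request.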

Communication between MCP Clients and Servers can use different transports. Standard input/output (stdio) suits local integrations in which both components run on the same machine. For remote or networked connections, MCP uses HTTP, with Server-Sent Events (SSE) for streaming messages from the server to the client.
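For the local stdio case, a common way to frame JSON-RPC messages is one JSON document per line. The sketch below assumes that newline-delimited framing and uses an in-memory `io.StringIO` buffer in place of a real child process pipe; the helper names `write_message` and `read_messages` are hypothetical.

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    # json.dumps without indent never emits a raw newline, so one line
    # always corresponds to exactly one message.
    stream.write(json.dumps(message) + "\n")


def read_messages(stream: TextIO) -> Iterator[dict]:
    for line in stream:
        line = line.strip()
        if line:  # skip blank lines between messages
            yield json.loads(line)


# An in-memory buffer stands in for the server's stdin/stdout pipe.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "result": {}})
pipe.seek(0)
received = list(read_messages(pipe))
```

In a real integration the host would launch the server as a subprocess and apply the same framing to the process's standard input and output streams.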

A fundamental aspect of MCP's design is its strong emphasis on security and user consent. The protocol mandates explicit user authorization for any data access initiated by a server and for the execution of any tools. This ensures that users maintain control over what information is shared and what actions are taken by AI systems interacting through MCP.
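One way a host application might enforce this consent requirement is to route every tool execution through an approval callback. This is a minimal sketch of that idea, not something the protocol prescribes: `call_tool_with_consent`, `ConsentDenied`, and the callback signatures are all hypothetical names chosen for the example.

```python
from typing import Any, Callable


class ConsentDenied(Exception):
    """Raised when the user declines a requested tool call."""


def call_tool_with_consent(
    name: str,
    arguments: dict,
    run_tool: Callable[[str, dict], Any],
    ask_user: Callable[[str], bool],
) -> Any:
    # The tool only runs after the user has explicitly approved this call.
    question = f"Allow tool '{name}' with arguments {arguments}?"
    if not ask_user(question):
        raise ConsentDenied(name)
    return run_tool(name, arguments)


def echo_tool(name: str, arguments: dict) -> str:
    """Hypothetical tool runner used only for the demonstration."""
    return f"{name} ran with {arguments}"


approved = call_tool_with_consent("post_message", {"text": "hi"}, echo_tool, lambda q: True)

try:
    call_tool_with_consent("post_message", {"text": "hi"}, echo_tool, lambda q: False)
    denied = False
except ConsentDenied:
    denied = True
```

In a desktop application the `ask_user` callback would typically open a confirmation dialog; here it is a plain function so the gate can be exercised without a user interface.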

The technical specifications of MCP, particularly the use of JSON-RPC and the clearly defined primitives, establish a structured and standardized framework for interaction between AI models and external systems. The inclusion of the "Sampling" primitive suggests a forward-looking approach towards enabling more sophisticated agentic behaviors. Furthermore, the significant focus on security underscores the critical importance of responsible data handling and controlled execution in AI applications.

2.4. Intended Use Cases and Applications

The versatility of MCP lends itself to a wide array of use cases and applications, particularly in scenarios where AI needs to interact with existing data and systems. One prominent application is in the development of enterprise data assistants, which can securely access a company's internal data, documents, and services to answer employee queries or automate specific tasks. Imagine a corporate chatbot capable of seamlessly querying multiple internal systems, such as HR databases, project management tools, and communication platforms, all through standardized MCP connectors.

MCP also plays a crucial role in AI-powered coding assistants that integrate with IDEs. These integrations can leverage MCP to access extensive codebases and documentation, providing developers with more accurate code suggestions and deeper insights. Similarly, MCP simplifies the process of connecting AI models to databases, streamlining data analysis and reporting workflows for AI-driven data querying tools.

Desktop AI applications can also benefit significantly from MCP, enabling them to securely access local files, applications, and services on a user's computer. This enhances their ability to provide contextually relevant responses and perform tasks based on local information. Furthermore, MCP can facilitate the automation of various tasks, such as data extraction from websites and web searches, by allowing AI agents to access specialized tools.

The protocol also supports applications requiring real-time data processing and interaction with sensors, opening up possibilities for AI in dynamic environments. Complex workflows that involve coordinating multiple tools, such as file systems and version control systems, can also be managed effectively through MCP. To expedite adoption, pre-built MCP servers are either available or under development for a range of popular enterprise platforms, including Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer.

The diverse range of intended use cases highlights MCP's potential to serve as a foundational technology for a more deeply integrated and context-aware AI ecosystem, particularly within enterprise settings and on individual user devices. The emphasis on secure and standardized data access makes it a valuable tool for organizations looking to leverage AI in a responsible and scalable manner.

2.5. Benefits

The adoption of Anthropic's Model Context Protocol offers several key benefits for developers and organizations seeking to integrate AI models with external systems. Primarily, MCP significantly simplifies the often complex process of integration between AI models and a multitude of external data sources and tools. By providing a standardized protocol, it eliminates the need for custom-built connectors for each unique combination of AI model and external system, streamlining development efforts and reducing the associated overhead.

Furthermore, MCP substantially enhances the context awareness of AI models by granting them access to real-time and relevant data from external sources. This capability allows AI systems to ground their responses and actions in the most up-to-date information, leading to more accurate and useful outcomes. The protocol also fosters the development of more autonomous and intelligent AI agents that can proactively access and utilize external resources to perform tasks on behalf of users. By streamlining data access, MCP contributes to improved efficiency in AI applications, enabling faster and more accurate responses.

MCP offers a standardized architecture for establishing connections between AI systems and data sources, which promotes greater interoperability across different platforms and vendors. Security and compliance are also strengthened through the controlled access mechanisms and the requirement for explicit user consent before data is accessed or tools are executed. The protocol's design facilitates the creation of composable integrations and workflows, allowing developers to build more complex and adaptable AI solutions. Ultimately, by standardizing the integration process, MCP helps to reduce both the initial development time and the ongoing maintenance costs associated with connecting AI to the external world.

These benefits collectively suggest a transformative potential for MCP in enabling a more deeply integrated and capable AI landscape. The emphasis on standardization, context awareness, and security points towards a future where AI systems can interact with the digital world in a more seamless, reliable, and responsible manner.

2.6. Limitations and Challenges

Despite the significant advantages offered by MCP, several limitations and challenges warrant consideration. One potential concern revolves around the stateful communication requirement between clients and servers, which might introduce complexities in terms of scalability and resource management, particularly in high-demand environments. Furthermore, when integrating a large number of tools via separate MCP connections, there is a risk of overwhelming the context window of the underlying large language model (LLM), potentially impacting performance and the accuracy of tool recommendations.

Another aspect to consider is the indirect nature of the interaction between the LLM and external tools within the MCP framework. The LLM generates structured outputs specifying the tool and its parameters, which are then executed by the MCP client, with the results being passed back to the LLM. This indirect interaction, while providing a layer of abstraction and control, might introduce additional complexity in certain scenarios. Integrating with existing tools that primarily utilize stateless REST APIs could also present challenges, potentially requiring the development of intermediary layers to manage session state and translate communication styles to align with MCP's stateful model.
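The indirect loop described above (the model emits a structured tool request, the client executes it, and the result is fed back) can be sketched as follows. Everything here is an assumption made for illustration: `fake_model` is a scripted stand-in for an LLM, the message shapes are simplified, and the `add` tool is hypothetical.

```python
import json


def fake_model(messages: list) -> dict:
    """Scripted stand-in for an LLM: requests a tool once, then answers."""
    if not any(m["role"] == "tool" for m in messages):
        return {"type": "tool_call", "name": "add", "arguments": {"a": 2, "b": 3}}
    tool_output = next(m["content"] for m in messages if m["role"] == "tool")
    return {"type": "final", "text": f"The sum is {tool_output}."}


# Hypothetical tool registry; in MCP these calls would go to a server.
TOOLS = {"add": lambda a, b: a + b}


def run_turn(user_text: str, model=fake_model, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "final":
            return reply["text"]
        # The client, not the model, executes the requested tool.
        result = TOOLS[reply["name"]](**reply["arguments"])
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("model did not finish within max_steps")


answer = run_turn("What is 2 + 3?")
print(answer)
```

The `max_steps` bound is a design choice in this sketch: since the model decides when to stop requesting tools, the client needs some limit to avoid looping indefinitely.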

Beyond the basic error object that JSON-RPC 2.0 provides, error handling within MCP is largely left to each server implementation, so the protocol does not enforce a uniform framework for application-level errors. This lack of uniformity could lead to inconsistencies in how errors are reported and managed across different MCP integrations. While MCP offers strong support for local connections, its current design might present barriers for large-scale enterprise deployments in cloud-native environments where high-throughput operations are essential. Some feedback has also indicated that the existing documentation focuses heavily on implementation details, which can make it harder for teams to quickly grasp the protocol's broader benefits and high-level concepts. As an open-source project, MCP also faces the risk of fragmentation if competing standards emerge or if consensus on future protocol updates proves hard to reach.

These limitations highlight areas where further development and refinement of the MCP protocol, as well as careful consideration during implementation, will be crucial for its continued success and widespread adoption. Addressing the challenges related to state management, context window size, and integration with existing systems will be key to unlocking the full potential of MCP in diverse deployment scenarios.

3. Google's Agent-to-Agent Protocol (A2A)

3.1. Purpose and Core Concepts

Google's Agent-to-Agent Protocol (A2A) is an open standard specifically designed to facilitate seamless communication and robust interoperability between diverse AI agents. A key objective of A2A is to enable agents built using different frameworks or by various vendors to effectively collaborate and work together, providing them with a common language for interaction. Google has explicitly positioned A2A as a complementary protocol to Anthropic's Model Context Protocol (MCP). In this complementary model, MCP primarily focuses on equipping individual AI agents with the necessary tools and contextual information to perform their tasks, while A2A addresses the critical need for these agents to communicate and coordinate their actions with other autonomous agents.

The introduction of A2A reflects a growing understanding within the AI community regarding the importance of collaboration among autonomous AI entities to tackle increasingly complex challenges. This development signifies a broader trend towards the creation of more sophisticated, multi-agent systems capable of distributed problem-solving. Google's strategic positioning of A2A as a companion to MCP indicates a recognition that both the capabilities of individual agents (enhanced by protocols like MCP) and the ability for these agents to interact and coordinate their efforts (facilitated by protocols like A2A) are essential components of a comprehensive and effective AI ecosystem. As AI agents become more integral to various aspects of our digital lives and business operations, the ability for them to communicate and collaborate seamlessly will be paramount for realizing their full potential in addressing complex, multi-faceted tasks.

3.2. Functionality and Architecture

The Agent-to-Agent (A2A) protocol is designed to facilitate communication between a "client" agent, which initiates a task, and one or more "remote" agents, which are responsible for acting upon those tasks. The protocol defines several key concepts that govern this interaction:

- Agent Card: This is a fundamental element of the A2A protocol, serving as a public metadata file, typically located at the well-known URL /.well-known/agent.json. The Agent Card provides a description of an agent's capabilities, the specific skills it possesses, its network endpoint URL, and any authentication requirements necessary to interact with it. Client agents utilize these Agent Cards for the crucial process of discovering other agents within the ecosystem.

- A2A Server: An A2A Server is essentially an AI agent that exposes an HTTP endpoint and implements the methods defined by the A2A protocol specification. Its primary responsibilities include receiving requests from client agents and managing the execution of the tasks contained within those requests.

- A2A Client: An A2A Client can be either a standalone application or another AI agent that consumes the services offered by A2A Servers. It initiates interactions by sending requests, such as tasks/send, to the URL of an A2A Server.

- Task: The Task is the central unit of work within the A2A protocol. A client agent initiates a task by sending a message using either the tasks/send method for immediate execution or the tasks/sendSubscribe method for tasks that might involve streaming updates. Each task is assigned a unique ID and progresses through a defined lifecycle, transitioning through various states such as submitted, working, input-required, completed, failed, and canceled.

- Message: A Message represents a single turn of communication between the client agent (typically with the role: "user") and the remote agent (with the role: "agent"). A message can contain one or more fundamental content units known as Parts.

- Part: A Part is the basic unit of content within either a Message or an Artifact. The protocol supports different types of Parts, including TextPart for plain text, FilePart which can contain inline bytes or a URI pointing to a file, and DataPart for structured JSON data, such as forms.

- Artifact: An Artifact represents the output or result generated by an agent during the execution of a task. Examples of artifacts include generated files, final structured data, or any other form of output produced by the agent. Similar to Messages, Artifacts also contain one or more Parts.
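The Task, Message, Part, and Artifact concepts listed above can be modeled in a few lines. The state names come from the list; the transition table, the `Task` class, and the dictionary shapes used for messages and artifacts are assumptions made for this sketch rather than the normative A2A schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transition table: the state names are from the text, the edges are not.
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED,
                        TaskState.FAILED, TaskState.CANCELED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.FAILED,
                               TaskState.CANCELED},
}


@dataclass
class Task:
    id: str
    state: TaskState = TaskState.SUBMITTED
    messages: list = field(default_factory=list)
    artifacts: list = field(default_factory=list)

    def advance(self, new_state: TaskState) -> None:
        # Terminal states have no entry in ALLOWED, so nothing follows them.
        if new_state not in ALLOWED.get(self.state, set()):
            raise ValueError(
                f"illegal transition {self.state.value} -> {new_state.value}"
            )
        self.state = new_state


task = Task(id="task-1")
task.messages.append(
    {"role": "user", "parts": [{"type": "text", "text": "Find candidates"}]}
)
task.advance(TaskState.WORKING)
task.artifacts.append(
    {"parts": [{"type": "data", "data": {"candidates": ["A", "B"]}}]}
)
task.advance(TaskState.COMPLETED)
```

Treating completed, failed, and canceled as terminal keeps the lifecycle easy to reason about: once a task reaches one of them, a client knows no further updates will arrive for that ID.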

The fundamental architecture of A2A revolves around the concept of independent AI agents that can discover each other's capabilities and then delegate specific tasks based on those capabilities. The Agent Card mechanism plays a pivotal role in this discovery process, enabling agents to advertise their skills and the necessary protocols for interaction. The emphasis on tasks, messages, and artifacts provides a structured and well-defined framework for managing the communication and workflow between collaborating agents. This design allows for the creation of more complex and distributed AI systems where individual agents with specialized skills can work together to achieve overarching goals.
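Discovery through Agent Cards might look like the following sketch, in which a client agent holds a few cards and picks a remote agent by skill. The card fields loosely mirror the description above (capabilities, skills, endpoint URL, authentication), but the exact field names, the example agents, the `example.com` URLs, and the helper functions are all hypothetical.

```python
from typing import List, Optional

# Illustrative Agent Cards; every value here is made up for the example.
CARDS = [
    {
        "name": "Sourcing Agent",
        "description": "Finds candidates for open roles.",
        "url": "https://sourcing.example.com/a2a",
        "authentication": {"schemes": ["bearer"]},
        "skills": [
            {"id": "find-candidates", "name": "Find candidates",
             "tags": ["hiring", "sourcing"]},
        ],
    },
    {
        "name": "Scheduling Agent",
        "description": "Books interviews on shared calendars.",
        "url": "https://scheduling.example.com/a2a",
        "authentication": {"schemes": ["bearer"]},
        "skills": [
            {"id": "book-interview", "name": "Book interview",
             "tags": ["hiring", "calendar"]},
        ],
    },
]


def card_url(base_url: str) -> str:
    """Well-known location where an agent publishes its card."""
    return base_url.rstrip("/") + "/.well-known/agent.json"


def find_agent(cards: List[dict], tag: str) -> Optional[dict]:
    """Return the first card advertising a skill with the given tag."""
    for card in cards:
        if any(tag in skill.get("tags", []) for skill in card.get("skills", [])):
            return card
    return None


chosen = find_agent(CARDS, "calendar")
print(chosen["name"], "at", chosen["url"])
```

A real client would fetch each card over HTTP from the well-known URL and check the authentication requirements before sending a task; the sketch keeps the cards in memory so the matching logic stays visible.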

3.3. Technical Specifications

Communication within the A2A protocol relies primarily on HTTP, and the protocol specification itself is formally defined using JSON. For long-running tasks, A2A supports Server-Sent Events (SSE), which let a server push updates to a client over an HTTP connection and so stream task progress in real time. A2A servers can also proactively send task status updates to a client-provided webhook URL through push notifications, so clients receive timely updates without constantly polling the server.
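To show what consuming a streamed task update involves, here is a minimal parser for the Server-Sent Events wire format, in which each event is a group of `data:` lines ended by a blank line. The parser handles only the `data` field, and the JSON payloads (a task ID with a nested status) are an assumed shape for illustration, not the exact A2A event schema.

```python
import json
from typing import List


def parse_sse(stream_text: str) -> List[dict]:
    """Parse an SSE body whose events each carry a JSON payload."""
    events, data_lines = [], []
    for line in stream_text.splitlines():
        if line.startswith("data:"):
            value = line[len("data:"):]
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the data
            data_lines.append(value)
        elif line == "" and data_lines:
            # A blank line dispatches the event collected so far.
            events.append(json.loads("\n".join(data_lines)))
            data_lines = []
        # Comment lines (starting with ':') and other fields are ignored here.
    return events


# Hypothetical stream of status updates for one task.
body = (
    ': keep-alive comment\n'
    'data: {"id": "task-1", "status": {"state": "working"}}\n'
    '\n'
    'data: {"id": "task-1", "status": {"state": "completed"}}\n'
    '\n'
)
updates = parse_sse(body)
states = [u["status"]["state"] for u in updates]
```

A production client would read the response incrementally instead of parsing a complete string, but the framing rules are the same.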

A core design principle of A2A is to facilitate the secure exchange of information and the coordinated execution of actions between different AI agents. The protocol is also designed to be modality agnostic, meaning it supports various forms of communication beyond just text, including interactive forms and even bidirectional audio and video streaming, allowing for richer and more versatile interactions between agents and potentially with end-users. Notably, A2A is built upon existing and widely adopted web standards, such as HTTP, SSE, and JSON-RPC, which simplifies its integration with current technological infrastructures.

The technical specifications of A2A highlight its reliance on well-established and broadly supported web technologies for communication and data exchange. The inclusion of SSE and push notifications underscores the protocol's ability to handle both short and long-running tasks efficiently and to provide timely updates. The focus on secure communication and support for diverse modalities positions A2A as a robust framework for building sophisticated multi-agent systems, particularly within enterprise environments where these features are often critical.

3.4. Intended Use Cases and Applications

The Agent-to-Agent (A2A) protocol is specifically intended to enable a wide range of collaborative scenarios between autonomous AI agents. Its primary use cases revolve around facilitating direct communication between agents, ensuring the secure exchange of information, and coordinating actions across various tools, services, and enterprise systems. One key application area is in building sophisticated multi-agent systems designed to handle complex tasks that require the coordinated efforts of multiple specialized agents. A compelling example is in the domain of hiring, where a central hiring agent could leverage A2A to interact with other specialized agents responsible for tasks such as sourcing candidates, scheduling interviews, and conducting background checks.

A2A also aims to connect and streamline business operations across different departments within an organization. For instance, agents responsible for customer support, inventory management, and finance could communicate and coordinate their activities through A2A, leading to more integrated and automated business processes. The protocol can also be utilized to link different software applications together, enabling the creation of end-to-end automated workflows that span multiple systems. A significant advantage of A2A is its ability to foster collaboration between agents even if they were developed by different vendors or utilize different underlying frameworks. This interoperability is crucial for building heterogeneous AI ecosystems. Furthermore, A2A can be instrumental in creating more intelligent virtual assistants that function as integrated systems, capable of delegating sub-tasks to a network of specialized agents operating behind the scenes.

These intended use cases highlight A2A's strong focus on enabling collaborative AI within enterprise environments and beyond. By providing a standardized way for agents to discover, communicate, and coordinate with each other, A2A has the potential to unlock new levels of automation and efficiency for complex, multi-step processes.

3.5. Benefits

The Agent-to-Agent (A2A) protocol offers several compelling benefits for those looking to build and deploy multi-agent AI systems. Primarily, it enables seamless collaboration between autonomous AI agents, even if these agents are "opaque," meaning they don't need to expose their internal reasoning or memory states to interact. This is particularly important in enterprise settings where security and proprietary algorithms need to be protected. A2A also simplifies the integration of intelligent agents into existing enterprise applications, providing a straightforward method for leveraging agent capabilities across an organization's technology landscape. The protocol supports key enterprise requirements such as agent capability discovery, secure collaboration between agents, and efficient management of tasks and their states.

By enabling effective inter-agent communication, A2A increases the autonomy of AI agents and can significantly multiply productivity gains by allowing them to work together on complex problems. Furthermore, the standardization offered by A2A helps to reduce the long-term costs associated with building and maintaining custom integrations between different AI systems. A key advantage for users is the flexibility to combine agents from various providers, fostering a more open and competitive AI ecosystem. A2A also helps to break down data silos by allowing agents operating within one system to access and utilize information from other connected systems, promoting better information flow and more comprehensive solutions. The protocol is designed to support long-running tasks, providing real-time feedback, notifications, and state updates to users throughout the process, which is crucial for complex business operations. Finally, A2A is modality agnostic, capable of handling different data types beyond just text, including audio, video, and interactive forms, making it suitable for a wider range of applications and user interactions.

These benefits collectively highlight the potential of A2A to foster a more collaborative, efficient, and versatile AI landscape, particularly within enterprise environments where the ability for different AI systems to work together seamlessly is becoming increasingly critical.

3.6. Limitations and Challenges

While A2A presents a promising framework for agent interoperability, it is a newer protocol than MCP, and its ecosystem is at an earlier stage of development. Some industry observers question how complementary A2A and MCP will be in practice, and see potential for overlap or even competition as both protocols evolve. Implementing both MCP for individual agent capabilities and A2A for inter-agent communication could add complexity for developers and organizations, and the developer community may initially be unsure where the responsibilities of one protocol end and those of the other begin. The reliance on the Agent Card as the primary discovery mechanism might also require enhancement to support more dynamic and nuanced interactions, since the current specification might not cover every complexity of real-world agent interaction. As with any new technology, widespread adoption and the growth of a robust ecosystem will be crucial in determining A2A's long-term success and impact on the field of AI agents.

4. Comparative Analysis

4.1. Key Feature Comparison

The following table provides a summary of the key features of Anthropic's Model Context Protocol (MCP) and Google's Agent-to-Agent Protocol (A2A):

| Feature | MCP | A2A |
|---|---|---|
| Primary Focus | Connecting AI models to external data and tools | Communication and interoperability between AI agents |
| Core Architecture | Client-Server (Host-Client-Server) | Client-Server (Agent-to-Agent) |
| Key Components | Host, Client, Server, Resources, Tools, Prompts | Client Agent, Server Agent, Agent Card, Task, Message, Artifact |
| Communication Protocol | JSON-RPC 2.0 over stdio and HTTP with SSE | HTTP, JSON specification, SSE, Push Notifications |
| Scope | Individual AI agent's access to information and capabilities | Interaction and coordination between multiple AI agents |
| Intended Use Cases | Data retrieval, tool invocation, context enrichment for single agents | Multi-agent workflows, complex task delegation, cross-system collaboration |
| Security Focus | User consent for data access and tool execution | Secure exchange of information between agents |
| Modality Support | Primarily data and tool interaction | Text, forms, audio, video |
| Complementary/Competitive | Aims to provide the foundation for individual agent capabilities | Positioned as complementary, enabling collaboration between agents with MCP-enhanced capabilities |

4.2. Complementary vs. Competitive Nature

Google has explicitly stated that the Agent-to-Agent Protocol (A2A) is designed to complement Anthropic's Model Context Protocol (MCP). In this vision, MCP serves as the foundational layer, providing individual AI agents with the necessary tools and contextual information from external sources to effectively perform their tasks. Once these agents are equipped with the ability to access data and utilize tools through MCP, A2A then steps in to enable these capable agents to communicate, coordinate, and collaborate with other autonomous agents to achieve more complex, multi-faceted goals. Essentially, MCP can be viewed as the protocol that facilitates an agent's interaction with structured tools and data systems, while A2A governs the higher-level interactions and orchestration between intelligent agents.

To illustrate this complementary relationship, consider the analogy of a car repair shop. MCP would be the protocol that allows individual mechanic agents to interact with specific tools, such as raising a car on a platform or using a wrench of a particular size. On the other hand, A2A would be the protocol that enables communication between the customer and the shop employees (the agents), allowing the customer to explain the problem ("my car is making a rattling noise") and the agents to engage in a back-and-forth dialogue to diagnose and resolve the issue ("send me a picture of the left wheel," "I notice fluid leaking. How long has that been happening?"). A2A also facilitates communication between the shop employees and other relevant agents, such as parts suppliers.

Despite this delineation of intended roles, some industry observers suggest that MCP and A2A could overlap, or even compete, in the long term. This would happen if one protocol grew to cover functionality that is currently the domain of the other: for instance, if MCP significantly expanded its support for orchestrating multi-agent workflows, or if A2A added mechanisms for directly accessing and managing external data. In practice, the relationship between the two will be shaped by their ongoing development and by how the developer community adopts each one. If both gain traction and settle into clear niches, they could together form a comprehensive foundation for the next generation of AI-powered systems. Any functional overlap, however, will need to be managed carefully to avoid fragmentation and confusion among developers.

4.3. Strengths and Weaknesses

MCP Strengths: MCP's central strength is that it gives individual AI agents access to a diverse range of data sources and external tools, which enhances their capabilities and context awareness. Its technical specifications are well defined and give developers a clear framework, and it benefits from a growing ecosystem of Software Development Kits (SDKs) and pre-built servers for popular services. Its design also emphasizes security and user control over both data access and tool execution, which is crucial for building trust and supporting responsible AI interactions. Fundamentally, MCP addresses a critical need: a standardized way to ground AI models in real-world data beyond the limits of their training datasets.

MCP Weaknesses: MCP also presents several challenges. Its requirement for stateful communication between clients and servers could make applications harder to scale and resources harder to manage, especially under high load. The context window of the underlying large language model can become overloaded when many tools are integrated through separate MCP connections, since each tool's description consumes part of the window, and this can degrade performance. The interaction between the LLM and the tools is indirect: the LLM does not execute tools itself but instructs an intermediary client to do so, which might add complexity in some use cases. Finally, MCP's primary focus on local connections might limit its suitability for large-scale enterprise deployments that rely heavily on cloud-based infrastructure.
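
The context-window concern can be made concrete with rough arithmetic. The sketch below rests on several assumptions: `estimate_tool_overhead` is a hypothetical helper, the tool definition is invented (it only loosely follows a name, description, and input-schema shape), the 200,000-token window is an arbitrary example value, and the four-characters-per-token ratio is a crude heuristic. Real tokenizers and real tool schemas will differ, so the output is illustrative only.

```python
import json
from typing import Dict, List

CHARS_PER_TOKEN = 4  # crude heuristic (assumption); real tokenizers vary


def estimate_tool_overhead(tools: List[Dict], context_window: int) -> Dict[str, float]:
    """Estimate how much of a context window the tool descriptions occupy."""
    serialized = json.dumps(tools)
    tokens = len(serialized) // CHARS_PER_TOKEN
    return {"tokens": tokens, "fraction": tokens / context_window}


# Hypothetical tool definition, repeated to simulate many integrated tools.
example_tool = {
    "name": "search_tickets",
    "description": "Search support tickets by keyword and date range.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "since": {"type": "string", "description": "ISO 8601 date"},
        },
        "required": ["query"],
    },
}

for count in (5, 50, 500):
    usage = estimate_tool_overhead([example_tool] * count, context_window=200_000)
    print(f"{count:>3} tools -> ~{usage['tokens']} tokens "
          f"({usage['fraction']:.1%} of window)")
```

Under these assumptions the overhead grows linearly with the number of tools, which is why selecting or filtering tools before exposing them to the model may matter once integrations number in the hundreds.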

A2A Strengths: A2A is engineered specifically for communication and collaboration between AI agents, regardless of their underlying architecture or vendor. It supports modalities beyond plain text, including forms, audio, and video, which allows richer and more versatile agent interactions. Because it builds on widely adopted web standards such as HTTP, JSON, and Server-Sent Events, it integrates readily with existing infrastructure and shortens the learning curve for developers. Together, these properties support more sophisticated and autonomous multi-agent systems that tackle complex tasks through coordinated effort.
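
Because Server-Sent Events is a plain-text format, a client can consume streamed updates with very little code, which is part of what keeps the learning curve low. The sketch below parses an SSE body whose `data:` lines carry JSON. It is a minimal illustration, not an A2A client: the payload fields (`taskId`, `state`) are hypothetical placeholders rather than fields taken from the A2A specification, and the parser handles only `data:` fields and blank-line event boundaries.

```python
import json
from typing import Dict, Iterator, List


def parse_sse_json(stream_text: str) -> Iterator[Dict]:
    """Yield one decoded JSON object per blank-line-terminated SSE event."""
    data_lines: List[str] = []
    for line in stream_text.splitlines():
        if line.startswith("data:"):
            value = line[len("data:"):]
            # The SSE format drops a single leading space after the colon.
            if value.startswith(" "):
                value = value[1:]
            data_lines.append(value)
        elif line == "" and data_lines:
            # A blank line ends the event; multiple data lines join with "\n".
            yield json.loads("\n".join(data_lines))
            data_lines = []
    # An event not terminated by a blank line is dropped in this sketch.


# Hypothetical stream: two status updates for one task.
sample = (
    'data: {"taskId": "t1", "state": "working"}\n'
    "\n"
    'data: {"taskId": "t1",\n'
    'data:  "state": "completed"}\n'
    "\n"
)

events = list(parse_sse_json(sample))
print([event["state"] for event in events])
```

A production client would also need to handle the `event:`, `id:`, and `retry:` fields, comments, and reconnection, all of which this sketch omits.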

A2A Weaknesses: As a relatively new protocol, A2A has an ecosystem that is still nascent compared with more established standards. Because A2A and MCP both address challenges in the AI agent space, their distinct roles may be confused or may overlap. Developers might also find that they need to implement both protocols to build a complete solution, which would increase overall system complexity.

5. Future Implications and Adoption Trends

The emergence of Anthropic's Model Context Protocol (MCP) and Google's Agent-to-Agent Protocol (A2A) signals a maturing AI agent landscape. Both are steps toward standardized methods for integrating AI systems with the real world and for enabling collaboration between different AI entities. Wider adoption of these open standards could foster a more interconnected and efficient AI ecosystem, driving innovation and reducing the cost of developing and deploying sophisticated AI applications.

The success of both protocols will depend heavily on developer-community engagement and contribution, the breadth of tool support, and the emergence of clear best practices and compelling use cases. Looking ahead, "agent marketplaces", where pre-built, interoperable AI agents can be discovered, accessed, and used, could further accelerate adoption across industries and applications. The interplay between MCP and A2A will be particularly significant, with developers likely to combine the strengths of both protocols to build more comprehensive AI solutions.

The overall trajectory points to a future in which standardization and interoperability are increasingly important characteristics of the AI agent ecosystem, with protocols like MCP and A2A laying the groundwork. Agent marketplaces, if they develop, could also democratize access to agent capabilities by making them available to a wider range of users and organizations.

6. Conclusion

Anthropic's Model Context Protocol (MCP) and Google's Agent-to-Agent Protocol (A2A) each address a critical challenge in developing and deploying AI agents. MCP provides a standardized and secure mechanism for an individual agent to connect with external data sources and tools, enhancing its context awareness and capabilities. A2A, by contrast, enables secure communication and collaboration between agents, irrespective of their underlying frameworks or vendors. Google has positioned A2A as complementary to MCP, but the evolving relationship between the two standards, and the potential for functional overlap, will be important to watch as the ecosystem matures. Widespread adoption and effective use of protocols like these could enable more deeply integrated, interoperable, and capable AI agent systems, driving innovation and efficiency gains across many industries.